1.

As Marcus Aurelius gathered his forces against German tribes in the second century AD, he summoned Claudius Galenus, an up-and-coming physician from Pergamum, to ride with him. Galen declined, politely and imaginatively, claiming a higher loyalty to “the contrary instructions of his personal patron god Asclepius.”1 This early instance of conscientious objection was accepted, it seems graciously. But in exchange for his indulgence, Marcus Aurelius ordered Galen to await his return and attend the health of his neophyte emperor son, the soon to be deranged Commodus.

Galen did his job rather too well, curing Commodus of an illness around 174 AD and unwittingly laying the ground for a murderous period of political instability some ten years or so later. Rome’s long-term loss was medicine’s great gain, for, as Galen later wrote,

During this time I collected and brought into a coherent shape all that I had learned from my teachers or discovered for myself. I was still engaged in research on some topics, and I wrote a lot in connection with these researches, training myself in the solution of all sorts of medical and philosophical problems.

In addition to being a fine scholar and a wise court physician, Galen was also the supreme polemicist of his day. The aggressive tendencies of his mother—“so bad-tempered that she would sometimes bite her maids”—provided a valuable store of endurance for Galen to draw on as he quarreled with his contemporaries. Indeed, his passion for conflict led him, at the age of twenty-eight, into the unusual role of physician to the gladiatorial school of Pergamum, a position, amid the flayed limbs, punctured chests, and eviscerated abdomens, that gave him a perfect vantage point for firsthand anatomical observation.

One of Galen’s philosophical preoccupations was to understand how doctors came to know what they did about healing. He lived at a time when there was no consensus about how doctors should acquire knowledge. Empiricists relied entirely on experience, while Rationalists depended on reason from a prespecified theory of causation. A third group, the Methodists, rejected both experience and causal theory, putting all illnesses down to a tension between the flow of bodily discharges and their constipation. Galen was a deft eclectic. He scrutinized opposing arguments, identified their flaws, erased erroneous logic, and combined what remained into a practicable clinical method. He wished to assert the primacy of clinical observation and to bind an integrated (Hippocratic, Platonic, Alexandrian) theory of medical knowledge with its practice. But Galen wanted to achieve his unique synthesis neither as a remote theoretician nor as someone who had a reputation for being merely a “word doctor”: “Rather, my practice of the art alone would suffice to indicate the level of my understanding.”

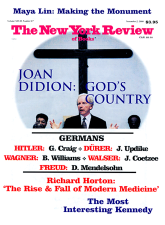

In The Rise and Fall of Modern Medicine James Le Fanu, a practicing London doctor, a prominent medical controversialist in the English press, and a person wholly dissatisfied with the huge power exerted by modern medical sects, has surveyed and systematized, processed and picked apart the past fifty years of medical discovery. Like Galen, he is frustrated by what he sees as the misleading ideologies of today’s widely accepted and lavishly praised medical epistemologists.

So just what has medicine achieved? If one believes the mainstream view of Western white male medical progress, it has been an astonishing success. The year 1999 saw many efforts to sum up what the editors of The New England Journal of Medicine have called “the astounding course of medical history over the past thousand years.”2 Medicine deserves such glorification, they said, because it “is one of the few spheres of human activity in which the purposes are unambiguously altruistic.” Well, quite possibly, provided that academic tenure is speedily secured, research grants are generously awarded, salaries stay ahead of inflation, teaching loads are progressively lightened, managed care organizations try harder to be respectfully flexible, and patients keep their lawyers at a distance.

Is it also true to say, as the prolific British historian Roy Porter has done, that Western medicine is “preoccupied with the self”? The narcissistic obsession with the body’s personal cosmos has produced, Porter insists, a compulsion to celebrate historical winners. He defends this constant reverence for success:

I do not think that “winners” should automatically be privileged by historians…but there is a good reason for bringing the winners to the foreground—not because they are “best” or “right” but because they are powerful.3

Other critics are less respectful of medicine’s traditions. Richard Gordon, the British author of Doctor in the House and fourteen amusing sequels, is an agreeably arch cynic. He prefers the view that

the history of medicine is not the testament of idealistic seekers after health and life…. The history of medicine is largely the substitution of ignorance by fallacies…. Medicine has persistently decked itself out in fashion’s shamming achievements, while staying miserably bare on masterly discoveries.4

Despite his witty debunking, Gordon’s personal résumé of pre-modern “masterly discoveries” largely conforms to the accepted medical canon. He includes William Harvey’s discovery of the circulation of the blood (1628), Edward Jenner’s exploitation of vaccination (1796), the discovery of anesthesia (in the 1840s), the elucidation of endocrine function by Claude Bernard (1850s), Charles Darwin’s theory of evolution (1859), Lord Lister’s invention of surgical antisepsis with carbolic acid (1865), the launch of bacteriology in the 1880s by Louis Pasteur and Robert Koch, the discovery of X-rays by Wilhelm Roentgen (1895), and Freud’s early forays into psychiatry. Great achievements, every one of them, even if we might quibble about Freud’s staying power.

To which, after consulting antiquarian medical book dealers, physician-collectors of historical memorabilia, and doctors working at two well-established West Coast medical schools, the respected clinicians Meyer Friedman and Gerald Friedland added Andreas Vesalius’s anatomic sketches (1543) and Antony Leeuwenhoek’s visualization of bacteria (1676).5 Friedman and Friedland also single out the work of William Harvey for special honor since it was he who “introduced the principle of experimentation for the first time in medicine.”

In the twentieth century, medicine underwent something of a bifurcation. The physician Kerr White, a decisive figure in the study of American public health, has identified 1916 as the crucial point of separation between a medicine concerned with the health of individuals and one concerned with the health of populations.6 It was in 1916 that the Rockefeller Foundation decided to create schools of public health independent of schools of medicine. The result was an abandonment of the social impulse within American medical education. This division contributed to the origination of two distinct histories of Western medicine, histories that had until then been indivisible.

By the end of the century, the separation of public health from clinical science was complete and officially recognized to be so. The “ten great public health achievements” of the twentieth century, according to the US Centers for Disease Control and Prevention, are vaccination, motor-vehicle safety, safer workplaces, control of infectious diseases, declines in deaths from coronary heart disease and stroke, safer and healthier foods, healthier mothers and babies, family planning, fluoridation of drinking water, and recognition of tobacco as a health hazard.7 Compare these simple social milestones with those feats of technical discovery celebrated annually since 1901 in the Nobel Prize in Physiology or Medicine. All but a few distinguished laureates have come from the laboratory rather than the clinic, and few prize winners reflect the tradition of public health.

This leaning of the Nobel committee to basic science has caused consternation among many in medicine. For some years, unsuccessfully, an annual letter-writing campaign has taken place to persuade the reluctant Swedes to give their prize to Richard Doll, the man who, among other achievements, codiscovered the link between smoking and lung cancer. He is eighty-seven years old and time is ebbing away. Will 2000 be his year?

Le Fanu’s thesis takes in this strange parallel evolution of laboratory science and public health, and he engagingly refuses to submit to convention about who should be applauded for their contributions to each. About Doll, for example, who he agrees is “one of the world’s most eminent cancer epidemiologists,” Le Fanu is scathing. Doll’s 1981 treatise, The Causes of Cancer, is found badly wanting: it “may look impressive, but appearances can be deceptive…. Intellectual rigor…is conspicuous only by its absence.”

I hope that Professor Ralf Pettersson, who chairs this year’s Nobel committee, does not read The Rise and Fall of Modern Medicine.

2.

In the fifty years after World War II, Le Fanu argues, the Western world has undergone a “unique period of prodigious intellectual ferment.” Drawing mainly on British and US experience, he goes on to select his personal ten definitive moments of discovery, arbitrarily he admits, from this half-century. But he also begins with a warning. Although there has been startling progress, medicine is now facing an era of perplexing stagnation. Doctors are disillusioned by their profession; they increasingly have to deal with “the worried well” rather than the genuinely sick; they have to contend with the puzzling and, for many physicians, irritating popularity of alternative medicine; and the costs of diagnosis and treatment are escalating at a rate that is not matched by advances in knowledge. From the 1970s onward, there has been “a marked decline” in innovation. And, worst of all, doctors have experienced a “subversion, by authoritarian managers and litigious patients, of the authority and dignity of the profession.”

The purpose of retelling standard histories of drug development (penicillin), physiology (cortisone), dramatic treatments (open-heart surgery), and identification of major causes of disease (the bacterium that causes peptic ulcers), as Le Fanu does in his “Lengthy Prologue,” is to find common patterns in the social conditions that delivered these discoveries. Four characteristics appear time and time again, none of which support the notion that medical progress is a rational enterprise. Le Fanu’s reading of the postwar history of medicine marks him, in Galenic terms, as an extreme and unforgiving empiricist.

First, medicine must pay a great debt to chance. In cancer treatment, for example, “virtually all [drugs] owe their origins to chance observation or luck.” They were mostly “stumbled upon,” often by “accident.” “The common theme,” Le Fanu concludes, “running through the discovery of these cancer drugs was that there was no common theme.” With respect to treatment of the childhood cancer acute lymphoblastic leukemia (ALL), “the most impressive achievement of the post-war years,”

the cure of ALL is proof of the power of science to solve the apparently insoluble. But science can certainly not claim all the credit, for many aspects of the cure of ALL remain frankly inexplicable.

Second, straightforward observation rather than intricate experimentation often produced the significant step forward when an impediment presented itself. Alexander Fleming’s fortuitous scrutiny of a discarded specimen plate, which showed a contaminating mold inhibiting bacterial growth—thus preparing the way for the discovery of penicillin and other antibiotics—required only an alert eye. Third, insight, not technological wizardry, can open hidden doors to understanding. In 1949, while studying ways to treat shock, Henri Laborit, a French naval surgeon, gave his patients promethazine, an antihistamine. He noticed that the drug produced not only sleep but also pain relief, so much so that a shot of morphine was unnecessary. He noted that “antihistamines produce a euphoric quietude… our patients are calm, with a restful and relaxed face.” From this careful description came the realization that promethazine and similar agents might treat disordered and agitated states of mind. The use of chlorpromazine as a treatment for schizophrenia soon followed.

Finally, progress depends on personal human drive, vision, and the belief in being right, irrespective of distracting, even fatal, setbacks. The best example of sheer stubbornness is the work of Robert Edwards and Patrick Steptoe, who were the first to deliver a “test-tube” baby in 1978. Le Fanu believes that Edwards faced early and “bitter disappointment that would have convinced any lesser person to give up in despair.” A request for research funding to study in-vitro fertilization was rejected on grounds of distasteful ethics and lack of proven success in animals. When Steptoe and Edwards finally did get the official go-ahead, their first twenty or so attempts over two years ended in dispiriting failure. The will to go on in the face of such unequivocal defeat showed, Le Fanu perceives with hindsight, great “moral courage.”

After this, Le Fanu examines the development of new disciplines that grew up around these discoveries. For instance, the new science of pharmacology fostered extensive commercial programs of therapeutic drug development. These drugs were not made after working out the details of how a cell or tissue functioned. Le Fanu presses his argument that heavily financed chemistry “blindly and randomly” produced “remedies that had eluded doctors for centuries.” In sum, “the dynamics of the therapeutic revolution owed more to a synergy between the creative forces of capitalism and chemistry than to the science of medicine and biology.”

The business of medicine also legitimized technology as a means to solve specific problems. Renal failure (dialysis), intestinal disorders (endoscopy), deafness (the cochlear implant), heart rhythm disturbances (pacemakers), and failures of vital body systems (intensive care), together with new means to look at parts of the body that previously only revealed themselves in the autopsy room—all helped to make doctors feel that their calling was being transformed into something quite miraculous. And here, according to Le Fanu, was the seed of subsequent decline. For doctors “came to believe their intellectual contribution to be greater than it really was, and that they understood more than they really did.”

The peak of their achievement came in the 1970s. But by the end of that decade, Le Fanu asks, “where were the new ideas?” He sees this “pivotal moment” as one that “has until now hardly been commented on.”

Drug innovation waned once the limiting effects of burdensome government regulation kicked in after the thalidomide tragedy. A more rational and less random approach to drug discovery was “much less fruitful than was hoped.” Chance allowed for the unexpected; science did not. Diagnostic techniques came to dominate clinical care. Patients were commonly overinvestigated by batteries of tests, “downgrading the importance of wisdom and experience in favor of spurious objectivity.” But Le Fanu’s target is not the machinery of medicine:

The culprit is not technology itself, but the intellectual and emotional immaturity of the medical profession, which seemed unable to exert the necessary self-control over its new-found powers.

As doctors misunderstood and misused the tools available to them, they passed the responsibility for research to a new professional cadre of medical scientists. The “fall” was by now irreversible. Failures stacked up in all spheres of medicine, laboratory and clinical.

Basic science came to be dominated by molecular genetics. The discovery of DNA spawned new technologies that led to research into genetic engineering, genetic screening, and gene therapy. Naive investors, often ignorant about the wafer-thin credibility of the research they were paying for, poured millions into biotechnology companies. The result, according to Le Fanu, has been that “the impression of progress has not been vindicated by anything resembling the practical benefits originally anticipated.”

Worse, gene therapy has largely turned out to be “not only expensive but useless.” Why has the new genetics so far failed medicine? Le Fanu answers that “genetics is not a particularly significant factor in human disease.” And, in any case, genes are “complex,” “unpredictable,” and “perverse.” They are not amenable to easy understanding. Their involvement in disease is largely “incomprehensible.”

And what of the clinic? Le Fanu believes the huge error that doctors made was to be “seduced” by “the Social Theory,” an approach to the study of disease by which exposures to environmental hazards or to dangerous forms of human behavior are sought as the possible causes of diseases. These epidemiological inquiries suggested ways of preventing illness by altering the exposure or modifying a behavior.

Smoking is an obvious example. Le Fanu has no doubts about the link between smoking and lung cancer; but he believes the success of Richard Doll’s early work has rendered doctors and the public prey to the foolish view that many diseases are caused by unhealthy lifestyles. Le Fanu’s key exhibit for the prosecution is the dietary hypothesis of heart disease—namely, that what one eats will determine one’s risk of a heart attack. This idea, according to Le Fanu, is “the great cholesterol deception.” Accepting the mechanistic link between cholesterol and heart disease, he vehemently denies that diet can be counted on to cure it. He applies the same skepticism to similar claims about diet and cancer (“quackery”). Without any qualification, Le Fanu concludes that the Social Theory “is in error in its entirety” (his italics). He accuses epidemiologists of “deceit,” “idealist utopianism,” and “lack of insight.” The consequence is that the epidemiological perspective

has wasted hundreds of millions of pounds in futile research and health-education programmes while justifying the imposition of costly regulations to reduce yet further the minuscule levels of pollution in air and water. And to cap it all, it does not work. The promise of the prevention of thousands of deaths a year has not been fulfilled.

The fall of medicine was complete.

3.

Le Fanu’s criticism of wildly exaggerated claims and expectations for the new genetics is shared by many of those who are leaders in the field. David Weatherall, who runs the Institute of Molecular Medicine at the University of Oxford, pointed out only last year that

the remarkable complexity of the genotype-phenotype relationship has undoubtedly been underestimated during the early period of the revolution in the biomedical sciences that followed the DNA era. It has led to many statements being made about the imminence of accurate predictive genetics that are simply not true…. It is far from certain that we will ever reach a stage in which we can accurately predict the occurrence of some of the common disorders of Western society at any particular stage in an individual’s life.8

Research tends to support Le Fanu’s view that genes are mostly a minor determinant of human disease. Studies of twins enable investigators to explore the genetic and environmental contributions to disease (although they tell us nothing about important interactions between the two). In one recent report, for example, evidence from twins showed “that the overwhelming contributor to the causation of cancer” was not genetic. If genes play an important part, then the risk of cancer should be substantially greater in identical twins than in nonidentical twins, and this was not the case.9 For breast cancer, only 27 percent of all causes can be traced to genetic factors. Prostate cancer was the disease in which genes had the most important part to play (42 percent of risk was explained by genes).

Genetic fatalism about disease is a myth that needs to be exposed once and for all. It is very unlikely that a simple and directly causal link between genes and most common diseases will ever be found. This message is not one that many scientists want the public to hear; continued political support for funding genetic research depends on persistent public credulity.

The prevailing if rather private realism among some scientists about the contribution of genetics to our understanding of human disease makes the recent hoopla about a reported first draft of the human genome all the more difficult to accept. An editorial writer for The Times of London, under the nonsensical headline “Secrets of Creation,” concluded with hundreds of other journalists that

this is a breathtaking moment for genetic science, for human health, even for philosophy…. The greatest scientific journey of this century starts here, with this directory; as its alphabet is decoded, the prediction, treatment and understanding of disease should be revolutionised…. It could, in particular, revolutionise the treatment of cancer, which is caused by malfunctioning genes.10

Not so. The fact is that progress in exploiting the genome will be painfully slow. Its importance lies not in the existence of a working draft of the genome—by itself, this tells us very little—but rather in its opening up the possibility for sequencing multiple copies of the human genome to discover variations among individuals in health and in disease. Even knowing this variation—a precondition for practical application—is of limited value, since the chief task of research must now be to study how variations in gene sequences interact with different environmental exposures, and gradations of each exposure, so as to alter the conditions of risk. I doubt that we will get far along this path during my lifetime (I am thirty-eight).

Having emphasized the importance of humility in the face of unfettered journalistic hyperbole, I should also mention the isolated signs of small steps forward. Le Fanu mocks the lack of progress in gene therapy since the first report of successful treatment for a type of inherited severe combined immunodeficiency disorder (SCID) in 1990. The recipient of this intervention, Ashanti de Silva, is now thirteen years old. She received eleven infusions of gene-corrected cells ten years ago and she has, in the words of her doctor, “thrived” ever since.11 Moreover, during the past decade, the techniques for giving new genes to patients with SCID have improved. In a recent report, the delivery of normal genes to two infants with SCID corrected their abnormal immune function. Ten months later, both children were living at home, growing and developing normally without any side effects. A third child has also been treated and was at home, fit and well, four months later.12 While it is far too early to draw firm conclusions, technical improvements in gene delivery do seem to translate into clinical benefit. One should still be cautious here: SCID is a rare condition amenable to correction with a single gene. Most common diseases will not be so easy to deal with.

Le Fanu’s opposition to the social theory of disease is, if anything, even greater than his skepticism of genetics. His vehement condemnation of the social theory is a regular subject in the columns he writes for the London Sunday Telegraph.13 Epidemiological studies produce, he claims, “spurious statistical associations whose contribution to useful knowledge is zero.” How has this “nonsense” come to be so ingrained in medicine? Le Fanu explains:

First, this type of study is easy to do: it takes no special expertise to switch on a computer, trawl through the social habits of a large group of people and come up with a “new” finding. Second, they have the veneer of scientific objectivity, with lots of figures and statistics whose publication in a journal is visible evidence of the researcher’s productivity.

Maybe. But it is Le Fanu’s notions of causation that are more at fault here. Epidemiology aims to uncover associations. Sometimes these associations, as Le Fanu has to admit, turn out to be true causes—as with smoking and lung cancer. But at other times, associations hide more subtle relationships. Perhaps red wine, let’s say, includes an as yet unidentified ingredient that explains why its consumption is linked to a particular outcome, such as a lower risk of heart disease. The idea that an association is equivalent to a cause is a fundamental error of epidemiological interpretation; but this does not mean it is futile to report associations. The conflicting reports of risk associations—for instance, that alcohol is or is not good for you—reflect the to and fro of scientific debate, not some essential flaw in the methods being used. What human science produces the entirely unequivocal and unchallengeable results that Le Fanu so yearns for?

He also expresses heartfelt discontent that the medical research industry, despite vast government and private investment, has so few certainties to show for its endeavors. But I think his conception of the research process is seriously mistaken. Clinical research never produces definitive conclusions for the simple reason that it depends on human beings, maddeningly variable and contrary subjects. Although medical science is reported as a series of discontinuous events—a new gene for this, a fresh cure for that—in truth it is nothing more than a continuous many-sided conversation whose progress is marked not by the discovery of a single correct answer but by the refinement of precision around a tendency, a trend, or a probability.

Advances in diagnosis and treatment depend on averaging the results from many thousands of people who take part in clinical trials. The paradoxical difficulty is that these averages, although valid statistically, tell us very little about what is likely to take place in a single person. Reading the findings of medical research and combining their deceptively exact numbers with the complexities of a patient’s circumstances is more of an interpretative than an evidence-based process. The aim is to shave off sharp corners of uncertainty, not to search for a perfect sphere of indisputable truth that does not and never could exist. In this way the process of research is often more important than the end result. It does not have the drama and heroism that Le Fanu dwells on in his ten definitive moments. But these moments are not typical of what most medical researchers do.

What should be clear is that Le Fanu is on shaky ground in rejecting the argument that changes in lifestyle have contributed to the rise and fall of heart disease in the US and Canada since the 1950s. He cites inconsistent findings that seem to prove a widespread confusion surrounding this orthodoxy. Superficially, his case is fair because risk factors related to lifestyles have usually been studied one at a time, providing a chaotic and conflicting picture overall. One recent study done at Harvard, however, has attempted to circumvent this problem by looking at the interplay of risk factors in a single large group of middle-aged women. The results will not please Le Fanu.

The small group who collectively did not smoke, remained reasonably thin, drank alcohol moderately, exercised regularly, and ate a diet rich in fiber and low in saturated fat reduced their risk of heart disease during a fourteen-year period by over 80 percent. Whichever way you interpret these data, how you live influences how you die. The Harvard epidemiologists conclude that their findings “support the hypothesis that adopting a more healthful lifestyle could prevent a substantial majority of coronary disease events in women.”14 The social theory of disease may not explain everything about life and death, but it would be wrong to cast epidemiology into oblivion just yet.

4.

Le Fanu ends his review of medicine’s demise in typically unflinching style: “By the 1970s much of what was ‘do-able’ had been done.” His interpretation of the past fifty years is that medical science has reached its natural limit:

The main burden of disease had been squeezed towards the extremes of life. Infant mortality was heading towards its irreducible minimum, while the vast majority of the population was now living out its natural lifespan to become vulnerable to diseases strongly determined by ageing.

Any solution to the diseases that now affect human longevity

means discarding the intellectual falsehoods of The Social Theory and the intellectual pretensions of The New Genetics…. The simple expedient of closing down most university departments of epidemiology could both extinguish this endlessly fertile source of anxiety-mongering while simultaneously releasing funds for serious research.

Le Fanu misreads the ills of present-day medicine. But his approach to the discoveries of the past does bring into clear relief important social changes in the way medical research is done today, changes that should influence professional, public, and political attitudes to contemporary medicine.

Clinical research had become highly specialized, often eliminating the ordinary doctor from the process of day-to-day investigation. This upheaval in the way research was done accelerated during the 1970s, at the time Le Fanu identifies as being the start of medicine’s precipitous fall. There was a hiatus in dramatic discoveries, it is true, but that now seems to be coming to an end. As research moved from the bedside to the laboratory, doctors in clinics were left empty-handed, with little to contribute to the production of medical knowledge. But a far more important instrument was being given to them—the randomized controlled clinical trial.

The clinical trial is a human experiment, enabling physicians to study the safety and effectiveness of interventions, whether in the form of drugs, devices, or prescribed changes in behavior. According to the Declaration of Helsinki, in principle a trial must be sanctioned by an ethics committee and the patients involved must give informed consent to taking part in it. These conditions are not always met, particularly in the developing world, and a furious debate is currently taking place over whether the declaration should be revised. But still, the randomized trial has become the foundation of current clinical knowledge. Clinicians are increasingly being drawn into trial networks. Far from being divorced from medical research, doctors are now back at the center of its most powerful new means of discovery.

The centrality of clinical trials to twenty-first-century medicine means that further definitive moments, unlike those in earlier times, may not always be instances of positively beneficial discovery. “Negative” results—proving that a drug either does not work or causes more harm than good—can be equally if not more important in shaping medical knowledge. Trials allow scientific concepts to be tested experimentally and these theories can sometimes be proven embarrassingly mistaken.

For example, stroke is a leading cause of death and disability in the Western world. In the early 1990s animal experiments suggested that brain damage after a stroke occurred when chemicals released during an episode of oxygen starvation overstimulated surrounding neurons. A theory was developed and tested in the laboratory in which blocking the effects of these chemicals protected the brain from further harm. Recently reported trials have shown this carefully worked out theory to be either wrong or, at best, seriously oversimplified.15

The new genetics is likely to expand, not contract, the potential for drug discovery. Genes code for proteins. Now that most of the human genome sequence is available to us, the total protein complement of the human cell (the proteome) is within reach. Since proteins control most cell processes, they are important natural targets for drugs. Not only will the genome and proteome yield new sites for drug action, but finding the pattern for the ways genes and proteins are expressed in each disease state will enable much more precise classification of diseases. Subtle differences in diseases, notably cancers, which were previously thought to be homogeneous pathological entities, are now being found, with significant implications for prognosis and treatment. Currently accepted disease classifications will soon be torn up. For example, one type of blood cancer that previously had an unpredictable outcome after treatment has recently been shown to consist of two separate categories of disease, categories that were distinguished from one another by their different molecular fingerprints. These two types of cancer had clearly distinct clinical outcomes. The confusion caused by lumping together two diseases as one was finally resolved.16

As these developments change the semantics of human disease, so they will reveal ever more clearly the vast inequalities in health between North and South. These differences have been with us for many years, but they have been all too openly emphasized by the excruciating brutality of the HIV/AIDS epidemic. The introduction of highly active antiretroviral treatment has cut the rate of illness by over 90 percent for the two million people living with AIDS in the developed world. But for the thirty-two million HIV-infected people living in poorer countries, access to these drugs is denied because of their high cost. According to a recent report from Médecins Sans Frontières, “In most poor countries the prices of HIV drugs condemn people with AIDS to premature death.”17 Contrary to Le Fanu, then, the major issue in medicine is not one of maintaining the past pace of discovery, but of making sure there is equitable access, throughout the world, to the discoveries we have already made.

There is one other aspect of medicine, hardly touched on by Le Fanu, which has probably caused deeper and more impassioned disagreement among today’s medical sects than any other issue during the past two decades: screening for disease. Screening should bring about the successful convergence of epidemiology and clinical medicine. A group of people apparently free from disease is screened for a disorder that, once found, is treated. Since the condition is identified early, the chances of cure are high. Mammography, the Pap test, colonoscopy, prostate-specific antigen: all of these investigations should throw up early warning signs of potentially fatal illnesses. Controversy is bound to arise over what to do when a result is positive. But these skirmishes are nothing compared with the terrifying choices that will be presented when genetic tests become more widely available.

Women with the gene mutations BRCA1 or BRCA2, which were first reported in 1994 and 1995, respectively, have a lifetime risk of breast cancer ranging up to 85 percent. If such a mutation is discovered in a woman, what should she do? She might have no identifiable disease at the time the mutation is found. Should she choose what may, for her, lead to peace of mind (prophylactic mastectomy) or should she choose regular surveillance? The little research that has been done suggests that about half of women with a risk mutation will choose prophylactic surgery, and that those who do so tend to be parents and of younger age.18 Genetic testing poses immensely difficult life choices for women at moments when the conflict between fear and apparent good health is unresolvable. Fear seems to drive the decision for surgery. As a leading team in genetic research on breast cancer recently wrote:

Prophylactic mastectomy is a mutilating and irreversible intervention, affecting body image and sexual relations. There is much concern about the potential psychological harm of DNA testing for BRCA1 and BRCA2 and prophylactic surgery, in particular mastectomy. However, in our experience and that of others, women who had mastectomy after adequate counselling rarely express regret, instead they are relieved from fear of cancer.

Finally, the separation of clinical medicine from public health seems as intractable as ever. And yet, oddly, it may be here that Le Fanu’s twin evils of genetics and social theory might find a useful meeting point. If one knows that a gene is in some way related to a disease, the study of those genes in populations can help one to plan the health services that will be needed to take care of those affected.

That is exactly what Shanthimala de Silva and her colleagues have done in Sri Lanka.19 They used chemical fingerprints for thalassemia, a genetically determined blood disorder causing severe anemia, to calculate that the island had over two thousand persons requiring treatment. To take appropriate care of these people would require about 5 percent of the country’s total health budget—$5 million, a large sum of money for a small nation. Yet it would be better to know what costs will be involved and to try to raise the money domestically and internationally than to ignore the disease. These findings have implications throughout the entire Indian subcontinent and Southeast Asia, where the gene frequencies for thalassemia are even higher than they are in Sri Lanka.

By improving the clarity of questions that medical practice poses and by diminishing the uncertainty of our answers to those questions, geneticists and social theorists have not damaged the “intellectual integrity” of medicine, as Le Fanu claims. They have simply blurred old and reassuring certainties. Le Fanu longs for the past authority enjoyed by doctors and for the deference that such authority demanded from patients. He berates these researchers for their erosion of medicine’s moral base. But just as scientists do not have ultimate control, despite their intense efforts to the contrary, over the interpretations others place on their work, so it seems ludicrous to impose a moral imperative on their motivations. Medical science is just as self-serving as any other branch of human inquiry. To claim a special moral purpose for medicine or even a beneficent altruism is simply delusional.

Many doctors do feel under pressure from the bureaucracy of managed care, the opportunism of zealous litigants, and the overwhelming weight of new knowledge that they are expected to assimilate. With all of these extraneous forces, is Le Fanu correct when he concludes that today “medicine is duller”? I doubt it. Medicine is as unpredictable, baffling, ambiguous, fallible, and absurd as it ever was.

November 2, 2000

1. Galen, “My Own Books,” in Selected Works, translated by P.N. Singer (Oxford University Press, 1997), p. 8.

2. The Editors, “Looking Back on the Millennium in Medicine,” The New England Journal of Medicine, January 6, 2000, p. 42.

3. Roy Porter, The Greatest Benefit to Mankind (HarperCollins, 1997), p. 12.

4. Richard Gordon, The Alarming History of Medicine (Mandarin, 1993), pp. 1-2.

5. Meyer Friedman and Gerald Friedland, Medicine’s 10 Greatest Discoveries (Yale University Press, 1998), pp. 1, 37.

6. Kerr L. White, Healing the Schism: Epidemiology, Medicine, and the Public’s Health (Springer-Verlag, 1991), p. xi.

7. See Morbidity and Mortality Weekly Report, April 2, 1999, pp. 241-243.

8. David Weatherall, “From Genotype to Phenotype: Genetics and Medical Practice in the New Millennium,” Philosophical Transactions of the Royal Society of London, Vol. 354, B (1999), p. 2008.

9. See Paul Lichtenstein et al., “Environmental and Heritable Factors in the Causation of Cancer,” The New England Journal of Medicine, July 13, 2000, pp. 78-85.

10. The Times of London, June 23, 2000.

11. See W. French Anderson, “The Best of Times, the Worst of Times,” Science, April 28, 2000, pp. 627-629.

12. See Marina Cavazzana-Calvo et al., “Gene Therapy of Human Severe Combined Immunodeficiency (SCID)-X1 Disease,” Science, April 28, 2000, pp. 669-672.

13. See, for example, James Le Fanu, “Scientists Who Should Carry a Health Warning,” Sunday Telegraph, July 9, 2000, p. 2.

14. Meir J. Stampfer et al., “Primary Prevention of Coronary Heart Disease in Women Through Diet and Lifestyle,” The New England Journal of Medicine, July 6, 2000, p. 21.

15. See Kennedy R. Lees et al., “Glycine Antagonist in Neuroprotection in Patients with Acute Stroke,” The Lancet, June 3, 2000, pp. 1949-1954.

16. See Ash A. Alizadeh et al., “Distinct Types of Diffuse Large B-Cell Lymphoma Identified by Gene Expression Profiling,” Nature, February 3, 2000, pp. 503-511.

17. Carmen Perez-Casas, HIV/AIDS Medicines Pricing Report (Médecins Sans Frontières, 2000).

18. E.J. Meijers-Heijboer et al., “Presymptomatic DNA Testing and Prophylactic Surgery in Families with a BRCA1 or BRCA2 Mutation,” The Lancet, June 10, 2000, p. 2019.

19. See Shanthimala de Silva et al., “Thalassaemia in Sri Lanka,” The Lancet, March 4, 2000, pp. 786-791.