I

Throughout the history of the study of man there has been a fundamental opposition between those who believe that progress is to be made by a rigorous observation of man’s actual behavior and those who believe that such observations are interesting only in so far as they reveal to us hidden and possibly fairly mysterious underlying laws that only partially and in distorted form reveal themselves to us in behavior. Freud, for example, is in the latter class, most of American social science in the former.

Noam Chomsky is unashamedly with the searchers after hidden laws. Actual speech behavior, speech performance, for him is only the top of a large iceberg of linguistic competence distorted in its shape by many factors irrelevant to linguistics. Indeed he once remarked that the very expression “behavioral sciences” suggests a fundamental confusion between evidence and subject matter. Psychology, for example, he claims is the science of mind; to call psychology a behavioral science is like calling physics a science of meter readings. One uses human behavior as evidence for the laws of the operation of the mind, but to suppose that the laws must be laws of behavior is to suppose that the evidence must be the subject matter.

In this opposition between the methodology of confining research to observable facts and that of using the observable facts as clues to hidden and underlying laws, Chomsky’s revolution is doubly interesting: first, within the field of linguistics, it has precipitated a conflict which is an example of the wider conflict; and secondly, Chomsky has used his results about language to try to develop general anti-behaviorist and anti-empiricist conclusions about the nature of the human mind that go beyond the scope of linguistics.

His revolution followed fairly closely the general pattern described in Thomas Kuhn’s The Structure of Scientific Revolutions: the accepted model or “paradigm” of linguistics was confronted, largely by Chomsky’s work, with increasing numbers of nagging counterexamples and recalcitrant data which the paradigm could not deal with. Eventually the counter-examples led Chomsky to break the old model altogether and to create a completely new one. Prior to the publication of his Syntactic Structures in 1957, many, probably most, American linguists regarded the aim of their discipline as being the classification of the elements of human languages. Linguistics was to be a sort of verbal botany. As Hockett wrote in 1942, “Linguistics is a classificatory science.”1

Suppose, for example, that such a linguist is giving a description of a language, whether an exotic language like Cherokee or a familiar one like English. He proceeds by first collecting his “data”: he gathers a large number of utterances of the language, which he records on his tape recorder or in a phonetic script. This “corpus” of the language constitutes his subject matter. He then classifies the elements of the corpus at their different linguistic levels. First he classifies the smallest significant functioning units of sound, the phonemes. At the next level the phonemes unite into the minimally significant bearers of meaning, the morphemes (in English, for example, the word “cat” is a single morpheme made up of three phonemes; the word “uninteresting” is made up of three morphemes: “un,” “interest,” and “ing”). At the next higher level the morphemes join together to form words and word classes such as noun phrases and verb phrases, and at the highest level of all come sequences of word classes, the possible sentences and sentence types.

The aim of linguistic theory was to provide the linguist with a set of rigorous methods, a set of discovery procedures which he would use to extract from the “corpus” the phonemes, the morphemes, and so on. The study of the meanings of sentences or of the uses to which speakers of the language put the sentences had little place in this enterprise. Meanings, scientifically construed, were thought to be patterns of behavior determined by stimulus and response; they were properly speaking the subject matter of psychologists. Alternatively they might be some mysterious mental entities altogether outside the scope of a sober science or, worse yet, they might involve the speaker’s whole knowledge of the world around him and thus fall beyond the scope of a study restricted only to linguistic facts.

Structural linguistics, with its insistence on objective methods of verification and precisely specified techniques of discovery, with its refusal to allow any talk of meanings or mental entities or unobservable features, derives from the “behavioral sciences” approach to the study of man, and is also largely a consequence of the philosophical assumptions of logical positivism. Chomsky was brought up in this tradition at the University of Pennsylvania as a student of both Zellig Harris, the linguist, and Nelson Goodman, the philosopher.

Chomsky’s work is interesting in large part because, while it is a major attack on the conception of man implicit in the behavioral sciences, the attack is made from within the very tradition of scientific rigor and precision that the behavioral sciences have been aspiring to. His attack on the view that human psychology can be described by correlating stimulus and response is not an a priori conceptual argument, much less is it the cry of an anguished humanist resentful at being treated as a machine or an animal. Rather it is a claim that a really rigorous analysis of language will show that such methods when applied to language produce nothing but falsehoods or trivialities, that their practitioners have simply imitated “the surface features of science” without having its “significant intellectual content.”

As a graduate student at Pennsylvania, Chomsky attempted to apply the conventional methods of structural linguistics to the study of syntax, but found that the methods that had apparently worked so well with phonemes and morphemes did not work very well with sentences. Each language has a finite number of phonemes and a finite though quite large number of morphemes. It is possible to get a list of each; but the number of sentences in any natural language like French or English is, strictly speaking, infinite. There is no limit to the number of new sentences that can be produced; and for each sentence, no matter how long, it is always possible to produce a longer one. Within structuralist assumptions it is not easy to account for the fact that languages have an infinite number of sentences.

Furthermore the structuralist methods of classification do not seem able to account for all of the internal relations within sentences, or the relations that different sentences have to each other. For example, to take a famous case, the two sentences “John is easy to please” and “John is eager to please” look as if they had exactly the same grammatical structure. Each is a sequence of noun-copula-adjective-infinitive verb. But in spite of this surface similarity the grammar of the two is quite different. In the first sentence, though it is not apparent from the surface word order, “John” functions as the direct object of the verb to please; the sentence means: it is easy for someone to please John. Whereas in the second “John” functions as the subject of the verb to please; the sentence means: John is eager that he please someone. That this is a difference in the syntax of the sentences comes out clearly in the fact that English allows us to form the noun phrase “John’s eagerness to please” out of the second, but not “John’s easiness to please” out of the first. There is no easy or natural way to account for these facts within structuralist assumptions.

Another set of syntactical facts that structuralist assumptions are inadequate to handle is the existence of certain types of ambiguous sentences where the ambiguity derives not from the words in the sentence but from the syntactical structure. Consider the sentence “The shooting of the hunters is terrible.” This can mean that it is terrible that the hunters are being shot or that the hunters are terrible at shooting or that the hunters are being shot in a terrible fashion. Another example is “I like her cooking.” In spite of the fact that it contains no ambiguous words (or morphemes) and has a very simple superficial grammatical structure of noun-verb-possessive pronoun-noun, this sentence is in fact remarkably ambiguous. It can mean, among other things, I like what she cooks, I like the way she cooks, I like the fact that she cooks, even, I like the fact that she is being cooked.

Such “syntactically ambiguous” sentences form a crucial test case for any theory of syntax. The examples are ordinary pedestrian English sentences, there is nothing fancy about them. But it is not easy to see how to account for them. The meaning of any sentence is determined by the meanings of the component words (or morphemes) and their syntactical arrangement. How then can we account for these cases where one sentence containing unambiguous words (and morphemes) has several different meanings? Structuralist linguists had little or nothing to say about these cases; they simply ignored them. Chomsky was eventually led to claim that these sentences have several different syntactical structures, that the uniform surface structure of, e.g., “I like her cooking” conceals several different underlying structures which he called “deep” structures. The introduction of the notion of the deep structure of sentences, not always visible in the surface structure, is a crucial element of the Chomsky revolution, and I shall explain it in more detail later.

One of the merits of Chomsky’s work has been that he has persistently tried to call attention to the puzzling character of facts that are so familiar that we all tend to take them for granted as not requiring explanation. Just as physics begins in wonder at such obvious facts as that apples fall to the ground or genetics in wonder that plants and animals reproduce themselves, so the study of the structure of language begins in wondering at such humdrum facts as that “I like her cooking” has different meanings, “John is eager to please” isn’t quite the same in structure as “John is easy to please,” and the equally obvious but often overlooked facts that we continually find ourselves saying and hearing things we have never said or heard before and that the number of possible new sentences is infinite.

The inability of structuralist methods to account for such syntactical facts eventually led Chomsky to challenge not only the methods but the goals and indeed the definition of the subject matter of linguistics given by the structuralist linguists. Instead of a taxonomic goal of classifying elements by performing sets of operations on a corpus of utterances, Chomsky argued that the goal of linguistic description should be to construct a theory that would account for the infinite number of sentences of a natural language. Such a theory would show which strings of words were sentences and which were not, and would provide a description of the grammatical structure of each sentence.

Such descriptions would have to be able to account for such facts as the internal grammatical relations and the ambiguities described above. The description of a natural language would be a formal deductive theory which would contain a set of grammatical rules that could generate the infinite set of sentences of the language, would not generate anything that was not a sentence, and would provide a description of the grammatical structure of each sentence. Such a theory came to be called a “generative grammar” because of its aim of constructing a device that would generate all and only the sentences of a language.

This conception of the goal of linguistics then altered the conception of the methods and the subject matter. Chomsky argued that since any language contains an infinite number of sentences, any “corpus,” even if it contained as many sentences as there are in all the books of the Library of Congress, would still be trivially small. Instead of the appropriate subject matter of linguistics being a randomly or arbitrarily selected set of sentences, the proper object of study was the speaker’s underlying knowledge of the language, his “linguistic competence” that enables him to produce and understand sentences he has never heard before.

Once the conception of the “corpus” as the subject matter is rejected, then the notion of mechanical procedures for discovering linguistic truths goes as well. Chomsky argues that no science has a mechanical procedure for discovering the truth anyway. Rather, what happens is that the scientist formulates hypotheses and tests them against evidence. Linguistics is no different: the linguist makes conjectures about linguistic facts and tests them against the evidence provided by native speakers of the language. He has in short a procedure for evaluating rival hypotheses, but no procedure for discovering true theories by mechanically processing evidence.

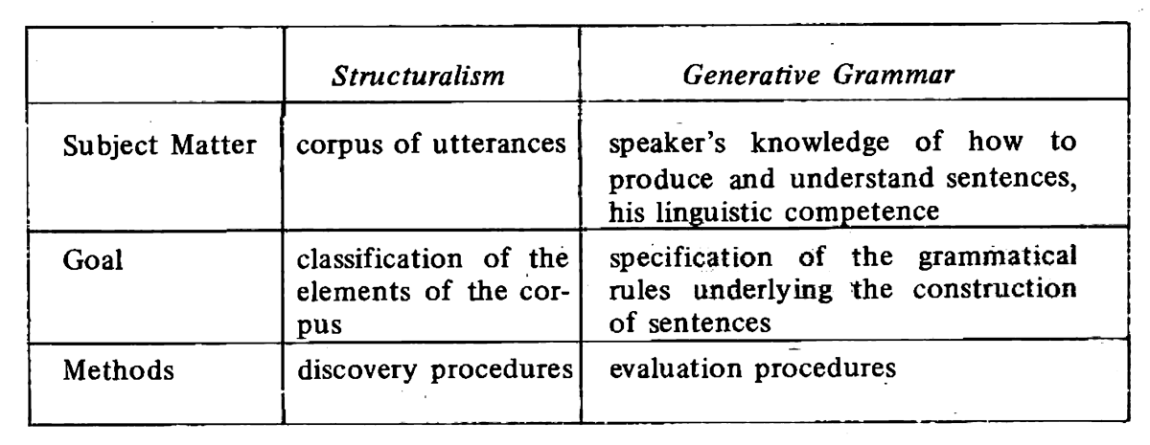

The Chomsky revolution can be summarized in the following chart:

Most of this revolution was already presented in Chomsky’s book Syntactic Structures. As one linguist remarked, “The extraordinary and traumatic impact of the publication of Syntactic Structures by Noam Chomsky in 1957 can hardly be appreciated by one who did not live through this upheaval.”2 In the years after 1957 the spread of the revolution was made more rapid and more traumatic by certain special features of the organization of linguistics as a discipline in the United States. Only a few universities had separate departments of linguistics. The discipline was (by contrast to, say, philosophy or psychology), and still is, a rather cozy one. Practitioners were few; they all tended to know one another; they read the same very limited number of journals; they had, and indeed still have, an annual get-together at the Summer Linguistics Institute of the Linguistic Society of America, where issues are thrashed out and family squabbles are aired in public meetings.

All of this facilitated a rapid dissemination of new ideas and a dramatic and visible clash of conflicting views. Chomsky did not convince the established leaders of the field but he did something more important, he convinced their graduate students. And he attracted some fiery disciples, notably Robert Lees and Paul Postal.

The spread of Chomsky’s revolution, like the spread of analytic philosophy during the same period, was a striking example of the Young Turk phenomenon in American academic life. The graduate students became generative grammarians even in departments that had traditionalist faculties. All of this also engendered a good deal of passion and animosity, much of which still survives. Many of the older generation still cling resentfully to the great traditions, regarding Chomsky and his “epigones” as philistines and vulgarians. Meanwhile Chomsky’s views have become the conventional wisdom, and as Chomsky and his disciples of the Sixties very quickly become Old Turks a new generation of Young Turks (many of them among Chomsky’s best students) arise and challenge Chomsky’s views with a new theory of “generative semantics.”

II

The aim of the linguistic theory expounded by Chomsky in Syntactic Structures (1957) was essentially to describe syntax, that is, to specify the grammatical rules underlying the construction of sentences. In Chomsky’s mature theory, as expounded in Aspects of the Theory of Syntax (1965), the aims become more ambitious: to explain all of the linguistic relationships between the sound system and the meaning system of the language. To achieve this, the complete “grammar” of a language, in Chomsky’s technical sense of the word, must have three parts, a syntactical component that generates and describes the internal structure of the infinite number of sentences of the language, a phonological component that describes the sound structure of the sentences generated by the syntactical component, and a semantic component that describes the meaning structure of the sentences. The heart of the grammar is the syntax; the phonology and the semantics are purely “interpretative,” in the sense that they describe the sound and the meaning of the sentences produced by the syntax but do not generate any sentences themselves.

The first task of Chomsky’s syntax is to account for the speaker’s understanding of the internal structure of sentences. Sentences are not unordered strings of words, rather the words and morphemes are grouped into functional constituents such as the subject of the sentence, the predicate, the direct object, and so on. Chomsky and other grammarians can represent much, though not all, of the speaker’s knowledge of the internal structure of sentences with rules called “phrase structure” rules.

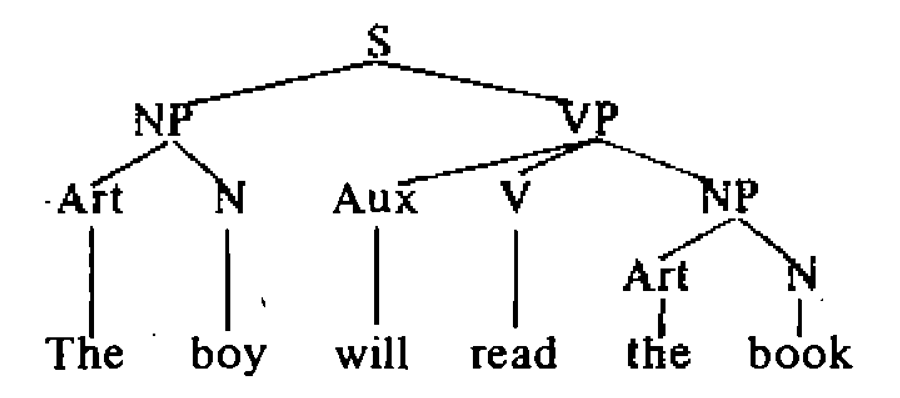

The rules themselves are simple enough to understand. For example, the fact that a sentence (S) can consist of a noun phrase (NP) followed by a verb phrase (VP) we can represent in a rule of the form: S → NP + VP. And for purposes of constructing a grammatical theory which will generate and describe the structure of sentences, we can read the arrow as an instruction to rewrite the left-hand symbol as the string of symbols on the right-hand side. The rewrite rules tell us that the initial symbol S can be replaced by NP + VP. Other rules will similarly unpack NP and VP into their constituents. Thus, in a very simple grammar, a noun phrase might consist of an article (Art) followed by a noun (N); and a verb phrase might consist of an auxiliary verb (Aux), a main verb (V), and a noun phrase (NP). A very simple grammar of a fragment of English, then, might look like this:

1. S → NP + VP

2. NP → Art + N

3. VP → Aux + V + NP

4. Aux → (can, may, will, must, etc.)

5. V → (read, hit, eat, etc.)

6. Art → (a, the)

7. N → (boy, man, book, etc.)

If we introduce the initial symbol S into this system, then construing each arrow as the instruction to rewrite the left-hand symbol with the elements on the right (and where the elements are bracketed, to rewrite it as one of the elements), we can construct derivations of English sentences. If we keep applying the rules to generate strings until we have no elements in our strings that occur on the left-hand side of a rewrite rule, we have arrived at a “terminal string.” For example, starting with S and rewriting according to the rules mentioned above, we might construct the following simple derivation of the terminal string underlying the sentence “The boy will read the book”:

S

NP + VP (by rule 1)

Art + N + VP (by rule 2)

Art + N + Aux + V + NP (by rule 3)

Art + N + Aux + V + Art + N (by rule 2)

the + boy + will + read + the + book (by rules 4, 5, 6, and 7)

The information contained in this derivation can be represented graphically in a tree diagram of the following form:

This “phrase marker” is Chomsky’s representation of the syntax of the sentence “The boy will read the book.” It provides a description of the syntactical structure of the sentence. Phrase structure rules of the sort I have used to construct the derivation were implicit in at least some of the structuralist grammars; but Chomsky was the first to render them explicit and to show their role in the derivations of sentences. He is not, of course, claiming that a speaker actually goes consciously or unconsciously through any such process of applying rules of the form “rewrite X as Y” to construct sentences. To construe the grammarian’s description this way would be to confuse an account of competence with a theory of performance.
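The derivation and the phrase marker can also be sketched in a few lines of Python. This is only an illustration of the seven rules listed above, not Chomsky's own formalism: the nested-tuple representation, the function names, and the decision to fix the lexical choices in advance (so that the result is deterministic) are all assumptions of the sketch.

```python
# Toy version of the seven rewrite rules from the text.
# RULES rewrite a phrase symbol as a sequence of symbols (rules 1-3);
# LEXICON lists the alternatives for each word class (rules 4-7).
RULES = {
    "S": ["NP", "VP"],
    "NP": ["Art", "N"],
    "VP": ["Aux", "V", "NP"],
}
LEXICON = {
    "Aux": ["can", "may", "will", "must"],
    "V": ["read", "hit", "eat"],
    "Art": ["a", "the"],
    "N": ["boy", "man", "book"],
}


def derivation(start="S"):
    """Return each string obtained by rewriting the leftmost phrase symbol."""
    line = [start]
    steps = [line]
    while any(symbol in RULES for symbol in line):
        i = next(k for k, symbol in enumerate(line) if symbol in RULES)
        line = line[:i] + RULES[line[i]] + line[i + 1:]
        steps.append(line)
    return steps


def build(symbol, words):
    """Build a phrase marker for `symbol`, consuming `words` left to right."""
    if symbol in RULES:
        return (symbol, [build(child, words) for child in RULES[symbol]])
    word = words.pop(0)
    if word not in LEXICON[symbol]:
        raise ValueError(f"{word!r} is not listed under {symbol}")
    return (symbol, word)


def bracket(marker):
    """Print a phrase marker as labeled brackets instead of a tree diagram."""
    label, body = marker
    if isinstance(body, str):
        return f"[{label} {body}]"
    return f"[{label} " + " ".join(bracket(child) for child in body) + "]"


for step in derivation():
    print(" + ".join(step))

marker = build("S", "the boy will read the book".split())
print(bracket(marker))
```

The labeled bracketing printed at the end carries the same information as a tree diagram: each pair of brackets corresponds to one node of the phrase marker.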

But Chomsky does claim that in some form or other the speaker has “internalized” rules of sentence construction, that he has “tacit” or “unconscious” knowledge of grammatical rules, and that the phrase structure rules constructed by the grammarian “represent” his competence. One of the chief difficulties of Chomsky’s theory is that no clear and precise answer has ever been given to the question of exactly how the grammarian’s account of the construction of sentences is supposed to represent the speaker’s ability to speak and understand sentences, and in precisely what sense of “know” the speaker is supposed to know the rules of the grammar.

Phrase structure rules were, as I have said, already implicit in at least some of the structuralist grammars Chomsky was attacking in Syntactic Structures. One of his earliest claims was that such rules, even in a rigorous and formalized deductive model such as we have just sketched, were not adequate to account for all the syntactical facts of natural languages. The entering wedge of his attack on structuralism was the claim that phrase structure rules alone could not account for the various sorts of cases such as “I like her cooking” and “John is eager to please.”

First, within such a grammar there is no natural way to describe the ambiguities in a sentence such as “I like her cooking.” Phrase structure rules alone would provide only one derivation for this sentence; but as the sentence is syntactically ambiguous, the grammar should reflect that fact by providing several different syntactical derivations and hence several different syntactical descriptions.

Secondly, phrase structure grammars have no way to picture the differences between “John is easy to please” and “John is eager to please.” Though the sentences are syntactically different, phrase structure rules alone would give them similar phrase markers.

Thirdly, just as in the above examples surface similarities conceal underlying differences that cannot be revealed by phrase structure grammar, so surface differences also conceal underlying similarities. For example, in spite of the different word order and the addition of certain elements, the sentence “The book will be read by the boy” and the sentence “The boy will read the book” have much in common: they both mean the same thing; the only difference is that one is in the passive voice and the other in the active voice. Phrase structure grammars alone give us no way to picture this similarity. They would give us two unrelated descriptions of these two sentences.

To account for such facts, Chomsky claims that in addition to phrase structure rules the grammar requires a second kind of rule, “transformational” rules, which transform phrase markers into other phrase markers by moving elements around, by adding elements, and by deleting elements. For example, by using Chomsky’s transformational rules, we can show the similarity of the passive to the active voice by showing how a phrase marker for the active voice can be converted into a phrase marker for the passive voice. Thus, instead of generating two unrelated phrase markers by phrase structure rules, we can construct a simpler grammar by showing how both the active and the passive can be derived from the same underlying phrase marker.
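What it means for a rule to map one phrase marker into another can be made concrete with a small Python sketch. It is a deliberate simplification and not Chomsky's statement of the passive rule: the nested-tuple phrase markers, the `PP` and `P` labels, and the `PARTICIPLE` table are hypothetical conveniences, and the function handles only markers of the shape NP, Aux, V, NP.

```python
# Hypothetical participle table; real English morphology is not modeled.
PARTICIPLE = {"read": "read", "hit": "hit", "eat": "eaten"}

# Phrase marker for "the boy will read the book" as nested tuples.
ACTIVE = ("S", [
    ("NP", [("Art", "the"), ("N", "boy")]),
    ("VP", [
        ("Aux", "will"),
        ("V", "read"),
        ("NP", [("Art", "the"), ("N", "book")]),
    ]),
])


def passive(marker):
    """Map an NP + Aux + V + NP phrase marker onto a passive phrase marker."""
    label, (subject, vp) = marker
    vp_label, (aux, verb, obj) = vp
    return (label, [
        obj,                                 # the object is moved to the front
        (vp_label, [
            aux,
            ("Aux", "be"),                   # an element is added
            ("V", PARTICIPLE[verb[1]]),      # the verb takes its participle form
            ("PP", [("P", "by"), subject]),  # the subject is moved into a by-phrase
        ]),
    ])


def words(marker):
    """Read the words off the bottom of a phrase marker, left to right."""
    label, body = marker
    if isinstance(body, str):
        return [body]
    return [word for child in body for word in words(child)]


print(" ".join(words(ACTIVE)))
print(" ".join(words(passive(ACTIVE))))
```

The point of the sketch is that `passive` never inspects the particular words: it operates on positions in the phrase marker, which is why one rule covers every sentence of the same shape.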

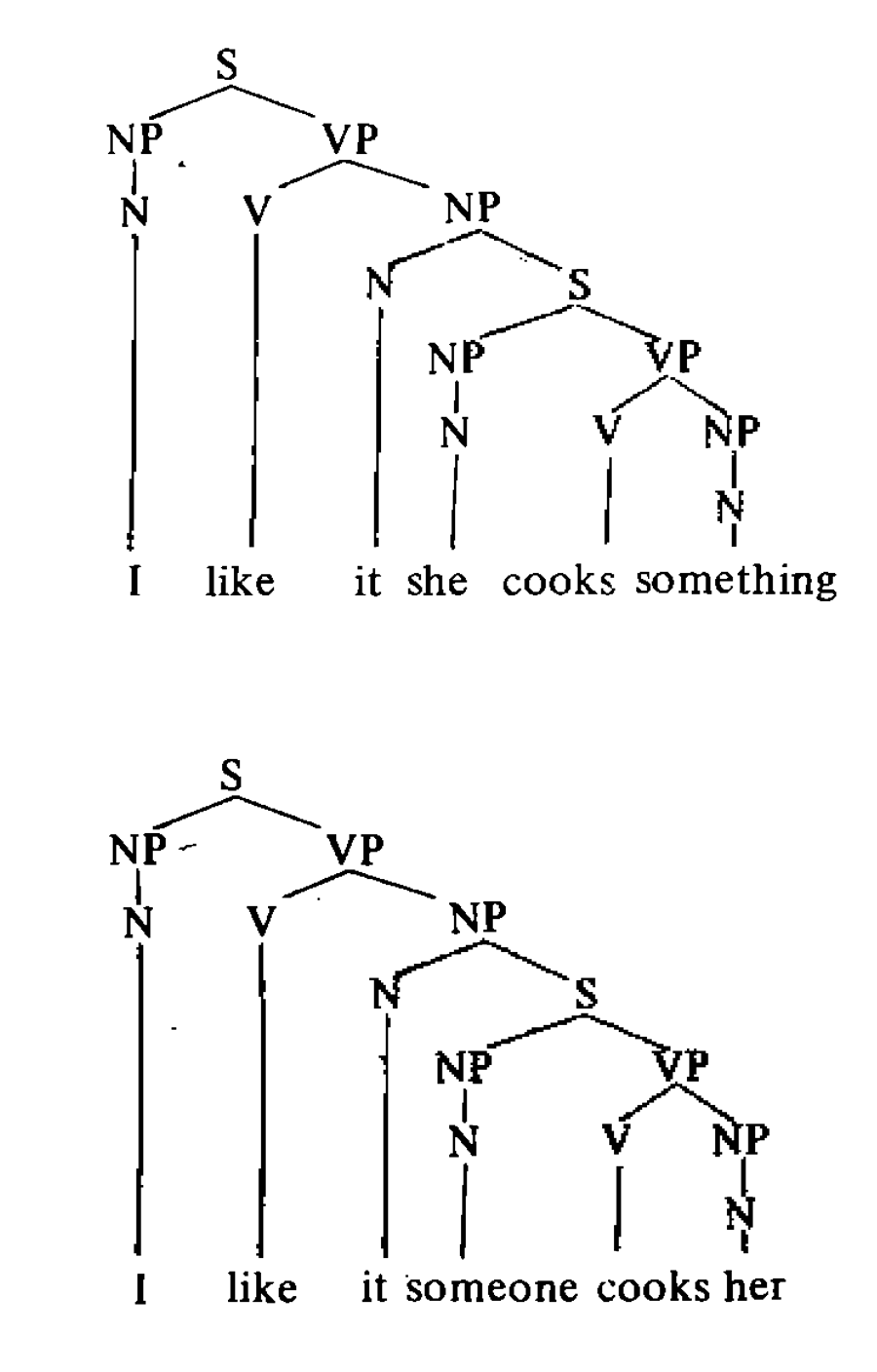

To account for sentences like “I like her cooking” we show that what we have is not just one phrase marker but several different underlying sentences each with a different meaning, and the phrase markers for these different sentences can all be transformed into one phrase marker for “I like her cooking.” Thus, underlying the one sentence “I like her cooking” are phrase markers for “I like what she cooks,” “I like the way she cooks,” “I like the fact that she cooks,” etc. For example, underlying the two meanings, “I like what she cooks” and “I like it that she is being cooked,” are the two phrase markers:3

Different transformational rules convert each of these into the same derived phrase marker for the sentence “I like her cooking.” Thus, the ambiguity in the sentence is represented in the grammar by phrase markers of several quite different sentences. Different phrase markers produced by the phrase structure rules are transformed into the same phrase marker by the application of the transformational rules.
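The many-to-one relation between underlying structures and a derived sentence can be illustrated with a toy Python sketch. Everything in it is an assumption made for the illustration: the dictionaries are crude stand-ins for deep structures, recording only who cooks and who is cooked, and `surface` is a single made-up nominalizing step rather than any transformation actually proposed in the literature.

```python
from collections import defaultdict

# Hypothetical stand-ins for the deep structures embedded under "I like ...".
# "agent" is the one who cooks; "patient" is the one who is cooked.
DEEP_STRUCTURES = [
    {"gloss": "I like what she cooks", "agent": "she", "patient": None},
    {"gloss": "I like the way she cooks", "agent": "she", "patient": None},
    {"gloss": "I like the fact that she cooks", "agent": "she", "patient": None},
    {"gloss": "I like the fact that she is being cooked", "agent": None, "patient": "she"},
]

POSSESSIVE = {"she": "her"}


def surface(deep):
    """Toy nominalization: whichever participant is present surfaces as a
    possessive, and every other distinction in the structure is deleted."""
    participant = deep["agent"] or deep["patient"]
    return f"I like {POSSESSIVE[participant]} cooking"


# Group the underlying structures by the surface sentence they yield.
by_surface = defaultdict(list)
for deep in DEEP_STRUCTURES:
    by_surface[surface(deep)].append(deep["gloss"])

for sentence, glosses in by_surface.items():
    print(sentence)
    for gloss in glosses:
        print("   <-", gloss)
```

Because the step deletes exactly the information that distinguishes the four structures, all of them collapse onto one sentence, which is the sense in which the ambiguity lives in the derivation rather than in the words.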

Because of the introduction of transformational rules, grammars of Chomsky’s kind are often called “transformational generative grammars” or simply “transformational grammars.” Unlike phrase structure rules which apply to a single left-hand element in virtue of its shape, transformational rules apply to an element only in virtue of its position in a phrase marker: instead of rewriting one element as a string of elements, a transformational rule maps one phrase marker into another. Transformational rules therefore apply after the phrase structure rules have been applied; they operate on the output of the phrase structure rules of the grammar.

Corresponding to the phrase structure rules and the transformational rules respectively are two components to the syntax of the language, a base component and a transformational component. The base component of Chomsky’s grammar contains the phrase structure rules, and these (together with certain rules restricting which combinations of words are permissible so that we do not get nonsense sequences like “The book will read the boy”) determine the deep structure of each sentence. The transformational component converts the deep structure of the sentence into its surface structure. In the example we just considered, “The book will be read by the boy” and the sentence “The boy will read the book,” two surface structures are derived from one deep structure. In the case of “I like her cooking,” one surface structure is derived from several different deep structures.

At the time of the publication of Aspects of the Theory of Syntax it seemed that all of the semantically relevant parts of the sentence, all the things that determine its meaning, were contained in the deep structure of the sentence. The examples we mentioned above fit in nicely with this view. “I like her cooking” has different meanings because it has different deep structures though only one surface structure; “The boy will read the book” and “The book will be read by the boy” have different surface structures, but one and the same deep structure, hence they have the same meaning.

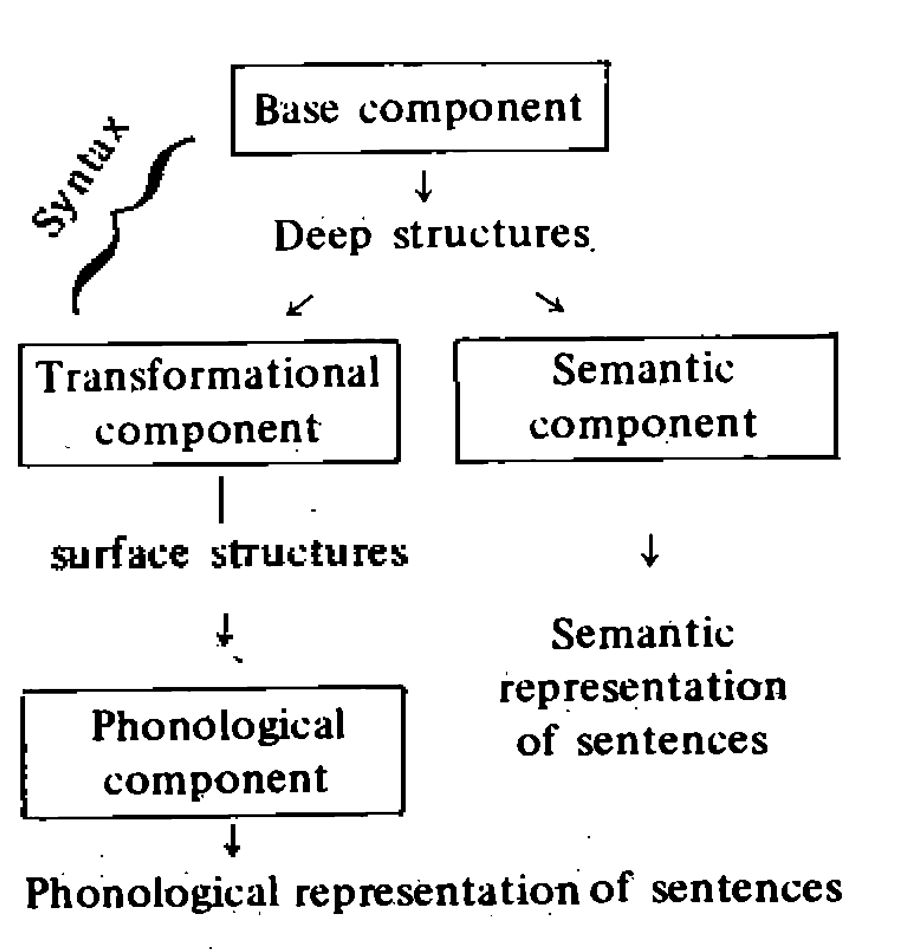

This produced a rather elegant theory of the relation of syntax to semantics and phonology: the two components of the syntax, the base component and the transformational component, generate deep structures and surface structures respectively. Deep structures are the input to the semantic component, which describes their meaning. Surface structures are the input to the phonological component, which describes their sound. In short, deep structure determines meaning, surface structure determines sound. Graphically the theory of a language was supposed to look like this:

The task of the grammarian is to state the rules that are in each of the little boxes. These rules are supposed to represent the speaker’s competence. In knowing how to produce and understand sentences, the speaker, in some sense, is supposed to know or to have “internalized” or have an “internal representation of” these rules.

The elegance of this picture has been marred in recent years, partly by Chomsky himself, who now concedes that surface structures determine at least part of meaning, and more radically by the younger Turks, the generative semanticists, who insist that there is no boundary between syntax and semantics and hence no such entities as syntactic deep structures.

III

Seen as an attack on the methods and assumptions of structural linguistics, Chomsky’s revolution appears to many of his students to be not quite revolutionary enough. Chomsky inherits and maintains from his structuralist upbringing the conviction that syntax can and should be studied independently of semantics; that form is to be characterized independently of meaning. As early as Syntactic Structures he was arguing that “investigation of such [semantic] proposals invariably leads to the conclusion that only a purely formal basis can provide a firm and productive foundation for the construction of grammatical theory.”4

The structuralists feared the intrusion of semantics into syntax because meaning seemed too vaporous and unscientific a notion for use in a rigorous science of language. Some of this attitude appears to survive in Chomsky’s persistent preference for syntactical over semantic explanations of linguistic phenomena. But, I believe, the desire to keep syntax autonomous springs from a more profound philosophical commitment: man, for Chomsky, is essentially a syntactical animal. The structure of his brain determines the structure of his syntax, and for this reason the study of syntax is one of the keys, perhaps the most important key, to the study of the human mind.

It is of course true, Chomsky would say, that men use their syntactical objects for semantic purposes (that is, they talk with their sentences), but the semantic purposes do not determine the form of the syntax or even influence it in any significant way. It is because form is only incidentally related to function that the study of language as a formal system is such a marvelous way of studying the human mind.

It is important to emphasize how peculiar and eccentric Chomsky’s overall approach to language is. Most sympathetic commentators have been so dazzled by the results in syntax that they have not noted how much of the theory runs counter to quite ordinary, plausible, and common-sense assumptions about language. The commonsense picture of human language runs something like this. The purpose of language is communication in much the same sense that the purpose of the heart is to pump blood. In both cases it is possible to study the structure independently of function but pointless and perverse to do so, since structure and function so obviously interact. We communicate primarily with other people, but also with ourselves, as when we talk or think in words to ourselves. Human languages are among several systems of human communication (some others are gestures, symbol systems, and representational art) but language has immeasurably greater communicative power than the others.

We don’t know how language evolved in human prehistory, but it is quite reasonable to suppose that the needs of communication influenced the structure. For example, transformational rules facilitate economy and so have survival value: we don’t have to say, “I like it that she cooks in a certain way,” we can say, simply, “I like her cooking.” We pay a small price for such economies in having ambiguities, but it does not hamper communication much to have ambiguous sentences because when people actually talk the context usually sorts out the ambiguities. Transformations also facilitate communication by enabling us to emphasize certain things at the expense of others: we can say not only “Bill loves Sally” but also “It is Bill that loves Sally” and “It is Sally that Bill loves.” In general an understanding of syntactical facts requires an understanding of their function in communication since communication is what language is all about.

Chomsky’s picture, on the other hand, seems to be something like this: except for having such general purposes as the expression of human thoughts, language doesn’t have any essential purpose, or if it does there is no interesting connection between its purpose and its structure. The syntactical structures of human languages are the products of innate features of the human mind, and they have no significant connection with communication, though, of course, people do use them for, among other purposes, communication. The essential thing about languages, their defining trait, is their structure. The so-called “bee language,” for example, is not a language at all because it doesn’t have the right structure, and the fact that bees apparently use it to communicate is irrelevant. If human beings evolved to the point where they used syntactical forms to communicate that are quite unlike the forms we have now and would be beyond our present comprehension, then human beings would no longer have language, but something else.

For Chomsky language is defined by syntactical structure (not by the use of the structure in communication) and syntactical structure is determined by innate properties of the human mind (not by the needs of communication). On this picture of language it is not surprising that Chomsky’s main contribution has been to syntax. The semantic results that he and his colleagues have achieved have so far been trivial.

Many of Chomsky’s best students find this picture of language implausible and the linguistic theory that emerges from it unnecessarily cumbersome. They argue that one of the crucial factors shaping syntactic structure is semantics. Even such notions as “a grammatically correct sentence” or a “well-formed” sentence, they claim, require the introduction of semantic concepts. For example, the sentence “John called Mary a Republican and then SHE insulted HIM”5 is a well-formed sentence only on the assumption that the participants regard it as insulting to be called a Republican.

Much as Chomsky once argued that structuralists could not comfortably accommodate the syntactical facts of language, so the generative semanticists now argue that his system cannot comfortably account for the facts of the interpenetration of semantics and syntax. There is no unanimity among Chomsky’s critics—Ross, Postal, Lakoff, McCawley, Fillmore (some of these are among his best students)—but they generally agree that syntax and semantics cannot be sharply separated, and hence there is no need to postulate the existence of purely syntactical deep structures.

Those who call themselves generative semanticists believe that the generative component of a linguistic theory is not the syntax, as in the above diagrams, but the semantics, that the grammar starts with a description of the meaning of a sentence and then generates the syntactical structures through the introduction of syntactical rules and lexical rules. The syntax then becomes just a collection of rules for expressing meaning.

It is too early to assess the conflict between Chomsky’s generative syntax and the new theory of generative semantics, partly because at present the arguments are so confused. Chomsky himself thinks that there is no substance to the issues because his critics have only rephrased his theory in a new terminology.6

But it is clear that a great deal of Chomsky’s overall vision of language hangs on the issue of whether there is such a thing as syntactical deep structure. Chomsky argues that if there were no deep structure, linguistics as a study would be much less interesting because one could not then argue from syntax to the structure of the human mind, which for Chomsky is the chief interest of linguistics. I believe on the contrary that if the generative semanticists are right (and it is by no means clear that they are) that there is no boundary between syntax and semantics and hence no syntactical deep structures, linguistics if anything would be even more interesting because we could then begin the systematic investigation of the way form and function interact, how use and structure influence each other, instead of arbitrarily assuming that they do not, as Chomsky has so often tended to assume.

It is one of the ironies of the Chomsky revolution that the author of the revolution now occupies a minority position in the movement he created. Most of the active people in generative grammar regard Chomsky’s position as having been rendered obsolete by the various arguments concerning the interaction between syntax and semantics. The old-time structuralists whom Chomsky originally attacked look on with delight at this revolution within the revolution, rubbing their hands in glee at the sight of their adversaries fighting each other. “Those TG [transformational grammar] people are in deep trouble,” one warhorse of the old school told me. But the traditionalists are mistaken to regard the fight as support for their position. The conflict is being carried on entirely within a conceptual system that Chomsky created. Whoever wins, the old structuralism will be the loser.

IV

The most spectacular conclusion about the nature of the human mind that Chomsky derives from his work in linguistics is that his results vindicate the claims of the seventeenth-century rationalist philosophers, Descartes, Leibniz, and others, that there are innate ideas in the mind. The rationalists claim that human beings have knowledge that is not derived from experience but is prior to all experience and determines the form of the knowledge that can be gained from experience. The empiricist tradition by contrast, from Locke down to contemporary behaviorist learning theorists, has tended to treat the mind as a tabula rasa, containing no knowledge prior to experience and placing no constraints on the forms of possible knowledge, except that they must be derived from experience by such mechanisms as the association of ideas or the habitual connection of stimulus and response. For empiricists all knowledge comes from experience, for rationalists some knowledge is implanted innately and prior to experience. In his bluntest moods, Chomsky claims to have refuted the empiricists and vindicated the rationalists.

His argument centers around the way in which children learn language. Suppose we assume that the account of the structure of natural languages we gave in Section II is correct. Then the grammar of a natural language will consist of a set of phrase structure rules that generate underlying phrase markers, a set of transformational rules that map deep structures onto surface structures, a set of phonological rules that assign phonetic interpretations to surface structures, and so on. Now, asks Chomsky, if all of this is part of the child’s linguistic competence, how does he ever acquire it? That is, in learning how to talk, how does the child acquire that part of knowing how to talk which is described by the grammar and which constitutes his linguistic competence?

Notice, Chomsky says, several features of the learning situation: The information that the child is presented with—when other people address him or when he hears them talk to each other—is limited in amount, fragmentary, and imperfect. There seems to be no way the child could learn the language just by generalizing from his inadequate experiences, from the utterances he hears. Furthermore, the child acquires the language at a very early age, before his general intellectual faculties are developed.

Indeed, the ability to learn a language is only marginally dependent on intelligence and motivation—stupid children and intelligent children, motivated and unmotivated children, all learn to speak their native tongue. If a child does not acquire his first language by puberty, it is difficult, and perhaps impossible, for him to learn one after that time. Formal teaching of the first language is unnecessary: the child may have to go to school to learn to read and write but he does not have to go to school to learn how to talk.

Now, in spite of all these facts the child who learns his first language, claims Chomsky, performs a remarkable intellectual feat: in “internalizing” the grammar he does something akin to constructing a theory of the language. The only explanation for all these facts, says Chomsky, is that the mind is not a tabula rasa, but rather, the child has the form of the language already built into his mind before he ever learns to talk. The child has a universal grammar, so to speak, programmed into his brain as part of his genetic inheritance. In the most ambitious versions of this theory, Chomsky speaks of the child as being born “with a perfect knowledge of universal grammar, that is, with a fixed schematism that he uses,…in acquiring language.”7 A child can learn any human language on the basis of very imperfect information. That being the case, he must have the forms that are common to all human languages as part of his innate mental equipment.

As further evidence in support of a specifically human “faculté de langage” Chomsky points out that animal communication systems are radically unlike human languages. Animal systems have only a finite number of communicative devices, and they are usually controlled by certain stimuli. Human languages by contrast, all have an infinite generative capacity and the utterances of sentences are not predictable on the basis of external stimuli. This “creative aspect of language use” is peculiarly human.

One traditional argument against the existence of an innate language learning faculty is that human languages are so diverse. The differences between Chinese, Nootka, Hungarian, and English, for example, are so great as to destroy the possibility of any universal grammar, and hence languages could only be learned by a general intelligence, not by any innate language learning device. Chomsky has attempted to turn this argument on its head: In spite of surface differences, all human languages have very similar underlying structures; they all have phrase structure rules and transformational rules. They all contain sentences, and these sentences are composed of subject noun phrases and predicate verb phrases, etc.

Chomsky is really making two claims here. First, a historical claim that his views on language were prefigured by the seventeenth-century rationalists, especially Descartes. Second, a theoretical claim that empiricist learning theory cannot account for the acquisition of language. Both claims are more tenuous than he suggests. Descartes did indeed claim that we have innate ideas, such as the idea of a triangle or the idea of perfection or the idea of God. But I know of no passage in Descartes to suggest that he thought the syntax of natural languages was innate. Quite the contrary, Descartes appears to have thought that language was arbitrary; he thought that we arbitrarily attach words to our ideas. Concepts for Descartes are innate, whereas language is arbitrary and acquired. Furthermore Descartes does not allow for the possibility of unconscious knowledge, a notion that is crucial to Chomsky’s system. Chomsky cites correctly Descartes’s claim that the creative use of language distinguishes man from the lower animals. But that by itself does not support the thesis that Descartes is a precursor of Chomsky’s theory of innate ideas.

The positions are in fact crucially different. Descartes thought of man as essentially a language-using animal who arbitrarily assigns verbal labels to an innate system of concepts. Chomsky, as remarked earlier, thinks of man as essentially a syntactical animal producing and understanding sentences by virtue of possessing an innate system of grammar, triggered in various possible forms by the different human languages to which he has been exposed. A better historical analogy than with Descartes is with Leibniz, who claimed that innate ideas are in us in the way that the statue is already prefigured in a block of marble. In a passage of Leibniz Chomsky frequently quotes, Leibniz makes

…the comparison of a block of marble which has veins, rather than a block of marble wholly even, or of blank tablets, i.e., of what is called among philosophers, a tabula rasa. For if the soul resembles these blank tablets, truth would be in us as the figure of Hercules is in the marble, when the marble is wholly indifferent to the reception of this figure or some other. But if there were veins in the block which would indicate the figure of Hercules rather than other figures, this block would be more determined thereto, and Hercules would be in it as in some sense innate, although it would be needful to labor to discover these veins, to clear them by polishing, and by cutting away what prevents them from appearing. Thus, it is that ideas and truths are for us innate, as inclinations, dispositions, habits, or natural potentialities, and not as actions, although these potentialities are always accompanied by some actions, often insensible, which correspond to them.8

But if the correct model for the notion of innate ideas is the block of marble that contains the figure of Hercules as “disposition,” “inclination,” or “natural potentiality,” then at least some of the dispute between Chomsky and the empiricist learning theorists will dissolve like so much mist on a hot morning. Many of the fiercest partisans of empiricist and behaviorist learning theories are willing to concede that the child has innate learning capacities in the sense that he has innate dispositions, inclinations, and natural potentialities. Just as the block of marble has the innate capacity of being turned into a statue, so the child has the innate capacity of learning. W. V. Quine, for example, in his response to Chomsky’s innateness hypothesis argues, “The behaviorist is knowingly and cheerfully up to his neck in innate mechanisms of learning readiness.” Indeed, claims Quine, “Innate biases and dispositions are the cornerstone of behaviorism.”9

If innateness is the cornerstone of behaviorism what then is left of the dispute? Even after all these ecumenical disclaimers by behaviorists to the effect that of course behaviorism and empiricism require innate mechanisms to make the stimulus-response patterns work, there still remains a hard core of genuine disagreement. Chomsky is arguing not simply that the child must have “learning readiness,” “biases,” and “dispositions,” but that he must have a specific set of linguistic mechanisms at work. Claims by behaviorists that general learning strategies are based on mechanisms of feedback, information processing, analogy, and so on are not going to be enough. One has to postulate an innate faculty of language in order to account for the fact that the child comes up with the right grammar on the basis of his exposure to the language.

The heart of Chomsky’s argument is that the syntactical core of any language is so complicated and so specific in its form, so unlike other kinds of knowledge, that no child could learn it unless he already had the form of the grammar programmed into his brain, unless, that is, he had “perfect knowledge of a universal grammar.” Since there is at the present state of neurophysiology no way to test such a hypothesis by inspection of the brain, the evidence for the conclusion rests entirely on the facts of the grammar. In order to meet the argument, the anti-Chomskyan would have to propose a simpler grammar that would account for the child’s ability to learn a language and for linguistic competence in general. No defender of traditional learning theory has so far done this (though the generative grammarians do claim that their account of competence is much simpler than the diagram we drew in Section II above).

The behaviorist and empiricist learning theorist who concedes the complexity of grammar is faced with a dilemma: either he relies solely on stimulus-response mechanisms, in which case he cannot account for the acquisition of the grammar, or he concedes, à la Quine, that there are innate mechanisms which enable the child to learn the language. But as soon as the mechanisms are rich enough to account for the complexity and specificity of the grammar, then the stimulus-response part of the theory, which was supposed to be its core, becomes uninteresting; for such interest as it still has now derives entirely from its ability to trigger the innate mechanisms that are now the crucial element of the learning theory. Either way, the behaviorist has no effective reply to Chomsky’s arguments.

V

The weakest element of Chomsky’s grammar is the semantic component, as he himself repeatedly admits.10 But while he believes that the semantic component suffers from various minor technical limitations, I think that it is radically inadequate; that the theory of meaning it contains is too impoverished to enable the grammar to achieve its objective of explaining all the linguistic relationships between sound and meaning.

Most, though not all, of the diverse theories of meaning advanced in the past several centuries from Locke to Chomsky and Quine are guilty of exactly the same fallacy. The fallacy can be put in the form of a dilemma for the theory: either the analysis of meaning itself contains certain of the crucial elements of the notion to be analyzed, in which case the analysis fails because of circularity; or the analysis reduces the thing to be analyzed into simpler elements which lack its crucial features, in which case the analysis fails because of inadequacy.

Before we apply this dilemma to Chomsky let us see how it works for a simple theory of meaning such as is found in the classical empirical philosophers, Locke, Berkeley, and Hume. These great British empiricists all thought that words got their meaning by standing for ideas in the mind. A sentence like “The flower is red” gets its meaning from the fact that anyone who understands the sentence will conjoin in his mind an idea of a flower with an idea of redness. Historically there were various arguments about the details of the theory (e.g., were the ideas for which general words stood themselves general ideas or were they particular ideas that were made “general in their representation”?). But the broad outlines of the theory were accepted by all. To understand a sentence is to associate ideas in the mind with the descriptive terms in the sentence.

But immediately the theory is faced with a difficulty. What makes the ideas in the mind into a judgment? What makes the sequence of images into a representation of the speech act of stating that the flower is red? According to the theory, first I have an idea of a flower, then I have an idea of redness. So far the sequence is just a sequence of unconnected images and does not amount to the judgment that the flower is red, which is what is expressed in the sentence. I can assume that the ideas come to someone who understands the sentence in the form of a judgment, that they just are somehow connected as representing the speech act of stating that the flower is red—in which case we have the first horn of our dilemma and the theory is circular, since it employs some of the crucial elements of the notion of meaning in the effort to explain meaning. Or on the other hand if I do not assume the ideas come in the form of a judgment then I have only a sequence of images in my mind and not the crucial feature of the original sentence, namely, the fact that the sentence says that the flower is red—in which case we have the second horn of our dilemma and the analysis fails because it is inadequate to account for the meaning of the sentence.

The semantic theory of Chomsky’s generative grammar commits exactly the same fallacy. To show this I will first give a sketch of what the theory is supposed to do. Just as the syntactical component of the grammar is supposed to describe the speaker’s syntactical competence (his knowledge of the structure of sentences) and the phonological component is supposed to describe his phonological competence (his knowledge of how the sentences of his language sound), so the semantic component is supposed to describe the speaker’s semantic competence (his knowledge of what the sentences mean and how they mean what they mean).

The semantic component of a grammar of a language embodies the semantic theory of that language. It consists of the set of rules that determine the meanings of the sentences of the language. It operates on the assumption, surely a correct one, that the meaning of any sentence is determined by the meaning of all the meaningful elements of the sentence and by their syntactical combination. Since these elements and their arrangement are represented in the deep structure of the sentence, the “input” to the semantic component of the grammar will consist of deep structures of sentences as generated by the syntactic component, in the way we described in Section II.

The “output” is a set of “readings” for each sentence, where the readings are supposed to be a “semantic representation” of the sentence; that is, they are supposed to be descriptions of the meanings of the sentence. If for example a sentence has three different meanings the semantic component will duplicate the speaker’s competence by producing three different readings. If the sentence is nonsense the semantic component will produce no readings. If two sentences mean the same thing, it will produce the same reading for both sentences. If a sentence is “analytic,” that is, if it is true by definition because the meaning of the predicate is contained in the meaning of the subject (for example, “All bachelors are unmarried” is analytic because the meaning of the subject “bachelor” contains the meaning of the predicate “unmarried”), the semantic component will produce a reading for the sentence in which the reading of the predicate is contained in the reading of the subject.

Chomsky’s grammarian in constructing a semantic component tries to construct a set of rules that will provide a model of the speaker’s semantic competence. The model must duplicate the speaker’s understanding of ambiguity, synonymy, nonsense, analyticity, self-contradiction, and so on. Thus, for example, consider the ambiguous sentence “I went to the bank.” As part of his competence the speaker of English knows that the sentence is ambiguous because the word “bank” has at least two different meanings. The sentence can mean either I went to the finance house or I went to the side of the river. The aim of the grammarian is to describe this kind of competence; he describes it by constructing a model, a set of rules, that will duplicate it. His semantic theory must produce two readings for this sentence.

If, on the other hand, the sentence is “I went to the bank and deposited some money in my account” the semantic component will produce only one reading because the portion of the sentence about depositing money determines that the other meaning of bank—namely, side of the river—is excluded as a possible meaning in this sentence. The semantic component then will have to contain a set of rules describing which kinds of combinations of words make which kind of sense, and this is supposed to account for the speaker’s knowledge of which kinds of combinations of words in his language make which kind of sense.
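The behavior just described can be sketched in a few lines of Python. This is only a toy under stated assumptions, not the formal theory of Chomsky and his followers: the lexicon, the marker names, the `requires` field, and the function `readings` are all hypothetical, and the "glosses" are English paraphrases used purely for illustration. The sketch shows one reading being produced for each combination of word senses that survives the combination rules.

```python
from itertools import product

# Hypothetical toy lexicon: every entry, marker name, and gloss is invented
# for illustration. Each word has one or more senses; a sense carries a set
# of "semantic markers" and may require a marker from elsewhere in the sentence.
LEXICON = {
    "went": [{"gloss": "travelled", "markers": {"action"}}],
    "bank": [
        {"gloss": "finance house", "markers": {"institution", "holds_money"}},
        {"gloss": "side of the river", "markers": {"place", "riverside"}},
    ],
    "deposited": [
        {"gloss": "put money in", "markers": {"action"}, "requires": {"holds_money"}},
    ],
}


def readings(words):
    """Return one reading per compatible combination of word senses.

    Words missing from the toy lexicon are simply ignored.
    """
    known = [w for w in words if w in LEXICON]
    results = []
    for combo in product(*(LEXICON[w] for w in known)):
        # Markers supplied by all the senses chosen in this combination.
        available = set().union(*(sense["markers"] for sense in combo))
        # Keep the combination only if every sense's requirement is met.
        if all(sense.get("requires", set()) <= available for sense in combo):
            results.append(tuple(sense["gloss"] for sense in combo))
    return results


print(len(readings("I went to the bank".split())))  # 2
print(len(readings(
    "I went to the bank and deposited some money in my account".split()
)))  # 1
```

In the second sentence the riverside sense of "bank" is dropped because nothing in that combination supplies the marker that "deposited" is assumed to require.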

All of this can be, and indeed has been, worked up into a very elaborate formal theory by Chomsky and his followers; but when we have constructed a description of what the semantic component is supposed to look like, a nagging question remains: What exactly are these “readings”? What is the string of symbols that comes out of the semantic component supposed to represent or express in such a way as to constitute a description of the meaning of a sentence?

The same dilemma with which we confronted Locke applies here: either the readings are just paraphrases, in which case the analysis is circular, or the readings consist only of lists of elements, in which case the analysis fails because of inadequacy; it cannot account for the fact that the sentence expresses a statement. Consider each horn of the dilemma. In the example above when giving two different readings for “I went to the bank” I gave two English paraphrases, but that possibility is not open to a semantic theory which seeks to explain competence in English, since the ability to understand paraphrases presupposes the very competence the semantic theory is seeking to explain. I cannot explain general competence in English by translating English sentences into other English sentences. In the literature of the Chomskyan semantic theorists, the examples given of “readings” are usually rather bad paraphrases of English sentences together with some jargon about “semantic markers” and “distinguishers” and so on.11 We are assured that the paraphrases are only for illustrative purposes, that they are not the real readings.

But what can the real readings be? The purely formal constraints placed on the semantic theory are not much help in telling us what the readings are. They tell us only that a sentence that is ambiguous in three ways must have three readings, a nonsense sentence no readings, two synonymous sentences must have the same readings, and so on. But so far as these requirements go, the readings need not be composed of words but could be composed of any formally specifiable set of objects. They could be numerals, piles of stones, old cars, strings of symbols, anything whatever. Suppose we decide to interpret the readings as piles of stones. Then for a three-ways ambiguous sentence the theory will give us three piles of stones, for a nonsense sentence, no piles of stones, for an analytic sentence the arrangement of stones in the predicate pile will be duplicated in the subject pile, and so on. There is nothing in the formal properties of the semantic component to prevent us from interpreting it in this way. But clearly this will not do because now instead of explaining the relationships between sound and meaning the theory has produced an unexplained relationship between sounds and stones.
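The point about formal constraints can be made concrete with a small Python sketch. Everything in it is an assumption made for illustration: the sentence labels, the function `satisfies_formal_constraints`, and both tables of readings are hypothetical. The check encodes only three of the formal requirements listed above (three readings for a three-ways ambiguous sentence, none for nonsense, identical readings for synonyms), and it is passed equally well by readings that look like words and by readings that stand in for piles of stones.

```python
def satisfies_formal_constraints(readings):
    """Check only the formal requirements, ignoring what the readings are."""
    return (
        len(readings["ambiguous_3_ways"]) == 3                # three readings
        and len(readings["nonsense"]) == 0                    # no readings
        and readings["synonym_a"] == readings["synonym_b"]    # same readings
    )


# Interpretation 1 (hypothetical): readings are strings of words.
as_words = {
    "ambiguous_3_ways": {"reading one", "reading two", "reading three"},
    "nonsense": set(),
    "synonym_a": {"shared reading"},
    "synonym_b": {"shared reading"},
}

# Interpretation 2 (hypothetical): readings are tuples standing in for
# piles of stones of different sizes.
as_stones = {
    "ambiguous_3_ways": {("stone",) * 1, ("stone",) * 2, ("stone",) * 3},
    "nonsense": set(),
    "synonym_a": {("stone",) * 4},
    "synonym_b": {("stone",) * 4},
}

print(satisfies_formal_constraints(as_words))   # True
print(satisfies_formal_constraints(as_stones))  # True
```

Nothing in the check distinguishes the two tables, which is the sense in which the formal requirements alone do not tell us what a reading is.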

When confronted with this objection the semantic theorists always make the same reply. Though we cannot produce adequate readings at present, ultimately the readings will be expressed in a yet to be discovered universal semantic alphabet. The elements in the alphabet will stand for the meaning units in all languages in much the way that the universal phonetic alphabet now represents the sound units in all languages. But would a universal semantic alphabet escape the dilemma? I think not.

Either the alphabet is a kind of a new artificial language, a new Esperanto, and the readings are once again paraphrases, only this time in the Esperanto and not in the original language; or we have the second horn of the dilemma and the readings in the semantic alphabet are just a list of features of language, and the analysis is inadequate because it substitutes a list of elements for a speech act.

The semantic theory of Chomsky’s grammar does indeed give us a useful and interesting adjunct to the theory of semantic competence, since it gives us a model that duplicates the speaker’s competence in recognizing ambiguity, synonymy, nonsense, etc. But as soon as we ask what exactly the speaker is recognizing when he recognizes one of these semantic properties, or as soon as we try to take the semantic theory as a general account of semantic competence, it cannot cope with the dilemma. Either it gives us a sterile formalism, an uninterpreted list of elements, or it gives us paraphrases, which explain nothing.

Various philosophers working on an account of meaning in the past generation12 have provided us with a way out of this dilemma. But to accept the solution would involve enriching the semantic theory in ways not so far contemplated by Chomsky or the other Cambridge grammarians. Chomsky characterizes the speaker’s linguistic competence as his ability to “produce and understand” sentences. But this is at best very misleading: a person’s knowledge of the meaning of sentences consists in large part in his knowledge of how to use sentences to make statements, ask questions, give orders, make requests, make promises, warnings, etc., and to understand other people when they use sentences for such purposes. Semantic competence is in large part the ability to perform and understand what philosophers and linguists call speech acts.

Now if we approach the study of semantic competence from the point of view of the ability to use sentences to perform speech acts, we discover that speech acts have two properties, the combination of which will get us out of the dilemma: they are governed by rules and they are intentional. The speaker who utters a sentence and means it literally utters it in accordance with certain semantic rules and with the intention of invoking those rules to render his utterance the performance of a certain speech act.

This is not the place to recapitulate the whole theory of meaning and speech acts,13 but the basic idea is this. Saying something and meaning it is essentially a matter of saying it with the intention to produce certain effects on the hearer. And these effects are determined by the rules that attach to the sentence that is uttered. Thus, for example, the speaker who knows the meaning of the sentence “The flower is red” knows that its utterance constitutes the making of a statement. But making a statement to the effect that the flower is red consists in performing an action with the intention of producing in the hearer the belief that the speaker is committed to the existence of a certain state of affairs, as determined by the semantic rules attaching to the sentence.

Semantic competence is largely a matter of knowing the relationships between semantic intentions, rules, and conditions specified by the rules. Such an analysis of competence may in the end prove incorrect, but it is not open to the obvious dilemmas I have posed to classical empiricist and Chomskyan semantic theorists. It is not reduced to providing us with paraphrase or a list of elements. The glue that holds the elements together into a speech act is the semantic intentions of the speaker.

The defect of the Chomskyan theory arises from the same weakness we noted earlier, the failure to see the essential connection between language and communication, between meaning and speech acts. The picture that underlies the semantic theory and indeed Chomsky’s whole theory of language is that sentences are abstract objects that are produced and understood independently of their role in communication. Indeed, Chomsky sometimes writes as if sentences were only incidentally used to talk with.14 I am claiming that any attempt to account for the meaning of sentences within such assumptions is either circular or inadequate.

The dilemma is not just an argumentative trick; it reveals a more profound inadequacy. Any attempt to account for the meaning of sentences must take into account their role in communication, in the performance of speech acts, because an essential part of the meaning of any sentence is its potential for being used to perform a speech act. There are two radically different conceptions of language in conflict here: one, Chomsky’s, sees language as a self-contained formal system used more or less incidentally for communication. The other sees language as essentially a system for communication.

The limitations of Chomsky’s assumptions become clear only when we attempt to account for the meaning of sentences within his system, because there is no way to account for the meaning of a sentence without considering its role in communication, since the two are essentially connected. So long as we confine our research to syntax, where in fact most of Chomsky’s work has been done, it is possible to conceal the limitations of the approach, because syntax can be studied as a formal system independently of its use, just as we could study the currency and credit system of an economy as an abstract formal system independently of the fact that people use money to buy things with or we could study the rules of baseball as a formal system independently of the fact that baseball is a game people play. But as soon as we attempt to account for meaning, for semantic competence, such a purely formalistic approach breaks down, because it cannot account for the fact that semantic competence is mostly a matter of knowing how to talk, i.e., how to perform speech acts.

The Chomsky revolution is largely a revolution in the study of syntax. The obvious next step in the development of the study of language is to graft the study of syntax onto the study of speech acts. And this is indeed happening, though Chomsky continues to fight a rearguard action against it, or at least against the version of it that the generative semanticists who are building on his own work now present.

There are, I believe, several reasons why Chomsky is reluctant to incorporate a theory of speech acts into his grammar. First, he has a mistaken conception of the distinction between performance and competence. He seems to think that a theory of speech acts must be a theory of performance rather than of competence, because he fails to see that competence is ultimately the competence to perform, and that for this reason a study of the linguistic aspects of the ability to perform speech acts is a study of linguistic competence. Secondly, Chomsky seems to have a residual suspicion that any theory that treats the speech act, a piece of speech behavior, as the basic unit of meaning must involve some kind of retreat to behaviorism. Nothing could be further from the truth. It is one of the ironies of the history of behaviorism that behaviorists should have failed to see that the notion of a human action must be a “mentalistic” and “introspective” notion since it essentially involves the notion of human intentions.

The study of speech acts is indeed the study of a certain kind of human behavior, but for that reason it is in conflict with any form of behaviorism, which is conceptually incapable of studying human behavior. But the third, and most important, reason, I believe, is Chomsky’s only partly articulated belief that language does not have any essential connection with communication, but is an abstract formal system produced by the innate properties of the human mind.

Chomsky’s work is one of the most remarkable intellectual achievements of the present era, comparable in scope and coherence to the work of Keynes or Freud. It has done more than simply produce a revolution in linguistics; it has created a new discipline of generative grammar and is having a revolutionary effect on two other subjects, philosophy and psychology. Not the least of its merits is that it provides an extremely powerful tool even for those who disagree with many features of Chomsky’s approach to language. In the long run, I believe his greatest contribution will be that he has taken a major step toward restoring the traditional conception of the dignity and uniqueness of man.

June 29, 1972

1. Quoted in R. H. Robins, A Short History of Linguistics (Indiana University Press, 1967), p. 239.

2. Howard Maclay, “Overview,” in D. Steinberg and L. Jakobovits, eds., Semantics (Cambridge University Press, 1971), p. 163.

3. Not all grammarians would agree that these are exactly the right phrase markers for these two meanings. My point here is only to illustrate how different phrase markers can represent different meanings.

4. Noam Chomsky, Syntactic Structures (Mouton & Co., 1957), p. 100.

5. As distinct from “John called Mary beautiful and then she INSULTED him.”

6. Cf., e.g., Noam Chomsky, “Deep Structure, Surface Structure, and Semantic Interpretation,” in D. Steinberg and L. Jakobovits, eds., Semantics (Cambridge University Press, 1971).

7. Noam Chomsky, “Linguistics and Philosophy,” in S. Hook, ed., Language and Philosophy (NYU Press, 1969), p. 88.

8. G. Leibniz, New Essays Concerning Human Understanding (Open Court, 1949), pp. 45-46.

9. W. V. O. Quine, “Linguistics and Philosophy,” in S. Hook, ed., Language and Philosophy (NYU Press, 1969), pp. 95-96.

10. I am a little reluctant to attribute the semantic component to Chomsky, since most of its features were worked out not by him but by his colleagues at MIT; nonetheless, since he incorporates it entirely as part of his grammar, I shall assess it as such.

11. For example, one of the readings given for the sentence “The man hits the colorful ball” contains the elements: [Some contextually definite] (Physical object) (Human) (Adult) (Male) (Action) (Instancy) (Intensity) [Collides with an impact] [Some contextually definite] (Physical object) (Color) [[Abounding in contrast or variety of bright colors] [Having a globular shape]]. J. Katz and J. Fodor, “The Structure of a Semantic Theory,” in J. Katz and J. Fodor, eds., The Structure of Language (Prentice-Hall, 1964), p. 513.

12. In, e.g., L. Wittgenstein, Philosophical Investigations (Macmillan, 1953); J. L. Austin, How to Do Things with Words (Harvard, 1962); P. Grice, “Meaning,” in Philosophical Review, 1957; J. R. Searle, Speech Acts, An Essay in the Philosophy of Language (Cambridge University Press, 1969); and P. F. Strawson, Logico-Linguistic Papers (Methuen, 1971).

13. For an attempt to work out some of the details, see J. R. Searle, Speech Acts, An Essay in the Philosophy of Language (Cambridge University Press, 1969), Chapters 1-3.

14. E.g., meaning, he writes, “need not involve communication or even the attempt to communicate,” Problems of Knowledge and Freedom (Pantheon Books, 1971), p. 19.