In mid-October, a cybersecurity researcher in the Netherlands demonstrated, online, as a warning,* the easy availability of the Internet protocol address and open, unsecured access points of the industrial control system—the ICS—of a wastewater treatment plant not far from my home in Vermont. Industrial control systems may sound inconsequential, but as the investigative journalist Andy Greenberg illustrates persuasively in Sandworm: A New Era of Cyberwar and the Hunt for the Kremlin’s Most Dangerous Hackers, they have become the preferred target of malicious actors aiming to undermine civil society. A wastewater plant, for example, removes contaminants from the water supply; if its controls were to be compromised, public health would be, too.

That Vermont water treatment plant’s industrial control system is just one of 26,000 ICSs across the United States that the Dutch researcher identified and mapped, all of them configured on the Internet in ways that leave them susceptible to hacking. Health care, transportation, agriculture, defense—no system is exempt. Indeed, all the critical infrastructure that undergirds much of our lives, from the water we drink to the electricity that keeps the lights on, is at risk of being held hostage or decimated by hackers working on their own or at the behest of an adversarial nation. According to a study of the United States by the insurance company Lloyd’s of London and the University of Cambridge’s Centre for Risk Studies, if hackers were to take down the electric grid in just fifteen states and Washington, D.C., 93 million people would be without power, quickly leading to a “rise in mortality rates as health and safety systems fail; a decline in trade as ports shut down; disruption to water supplies as electric pumps fail and chaos to transport networks as infrastructure collapses.” The cost to the economy, the study reported, would be astronomical: anywhere from $243 billion to $1 trillion. Sabotaging critical infrastructure may not be as great an existential threat as climate change or nuclear war, but it has imperiled entire populations already and remains a persistent probability.

From 2011 to 2013 Iranian hackers breached the control system of a small dam outside of New York City. Around the same time, they also broke into the servers of banks and financial firms, including JPMorgan Chase, American Express, and Wells Fargo, and besieged them for 144 days. The attacks were in retaliation for the Stuxnet virus, unleashed in 2010, which caused the destruction of nearly a thousand centrifuges at Iran’s largest uranium enrichment facility. Though neither the United States nor Israel took credit for the attack, both countries are widely believed to have created and deployed the malware that took over the facility’s automated controllers and caused the centrifuges to self-destruct. The attack was intended to be a deterrent—a way to slow down Iran’s nuclear development program and force the country to the negotiating table.

If it hadn’t worked, a more powerful cyberweapon, Nitro Zeus, was being held in reserve, apparently by the US, primed to shut down parts of the Iranian power grid, as well as its communications systems and air defenses. Had it been deployed, Nitro Zeus could have crippled the entire country with a cascading series of catastrophes: hospitals would not have been able to function, banks might have been shuttered and ATMs might have ceased to work, and transportation could have come to a standstill. Without money, people might not have been able to buy food. Without a functioning supply chain, there would have been no food to buy. The many disaster scenarios that could have followed are not hard to imagine and can be summed up in just a few words: people would have died.

When government officials like the director of the US Defense Intelligence Agency, Lieutenant General Robert Ashley, say they are kept up at night by the prospect of cyberwarfare, the vulnerability of industrial control systems is likely not far from mind. In 2017 Russian hackers found their way into the systems of one hundred American nuclear and other power plants. According to sources at the Department of Homeland Security, as reported in The New York Times, Russia’s military intelligence agency, in theory, is now in a position “to take control of parts of the grid by remote control.”

More recently, it was discovered that the same hacking group that disabled the safety controls at a Saudi Arabian oil refinery in 2017 was searching for ways to infiltrate the US power grid. Dragos, the critical infrastructure security firm that has been tracking the group, calls it “easily the most dangerous threat publicly known.” Even so, a new review of the US electrical grid by the Government Accountability Office (GAO) found that the Department of Energy has so far failed to “fully analyze grid cybersecurity risks.” China and Russia, the GAO report states, pose the greatest threat, though terrorist groups, cybercriminals, and rogue hackers “can potentially cause harm through destruction, disclosure, modification of data, or denial of service.” Russia alone is spending around $300 million a year on its cybersecurity and, in the estimation of scholars affiliated with the New America think tank, has the capacity to “go from benign to malicious rapidly, and…rapidly escalate its actions to cyber warfare.”

It’s not just Russia. North Korea, Iran, and China all have sophisticated cyberwarfare units. So, too, the United States, which by one account spends $7 billion a year on cyber offense and defense. That the United States has not advocated for a ban on cyberattacks on critical infrastructure, the Obama administration’s top cybersecurity official, J. Michael Daniel, tells Greenberg in Sandworm, may be because it wants to reserve that option itself. In June David Sanger and Nicole Perlroth reported in The New York Times that the United States had increased its incursions into the Russian power grid.

There are no rules of engagement in cyberspace. Like cyberspace itself, cyberwarfare is a relatively new concept, and one that is ill-defined. Greenberg appears to interpret it liberally, suggesting that it is a state-sponsored attack using malware or other malicious software, even if there is no direct retaliation, escalation, or loss of life. It may seem like a small semantic distinction, but cyberwarfare is not the same as cyberwar. The first is a tactic; the second is either a consequence of that tactic or an accessory to conventional armed conflict. (The military calls such conflict kinetic combat.) This past June, when the United States launched a cyberattack on Iran after it shot down an American drone patrolling the Strait of Hormuz, the goal was to forestall an all-out kinetic war. Responding to a physical attack with a cyberattack was a risk because, as Amy Zegart of Stanford’s Hoover Institution told me shortly afterward, we don’t yet understand escalation in cyberspace.

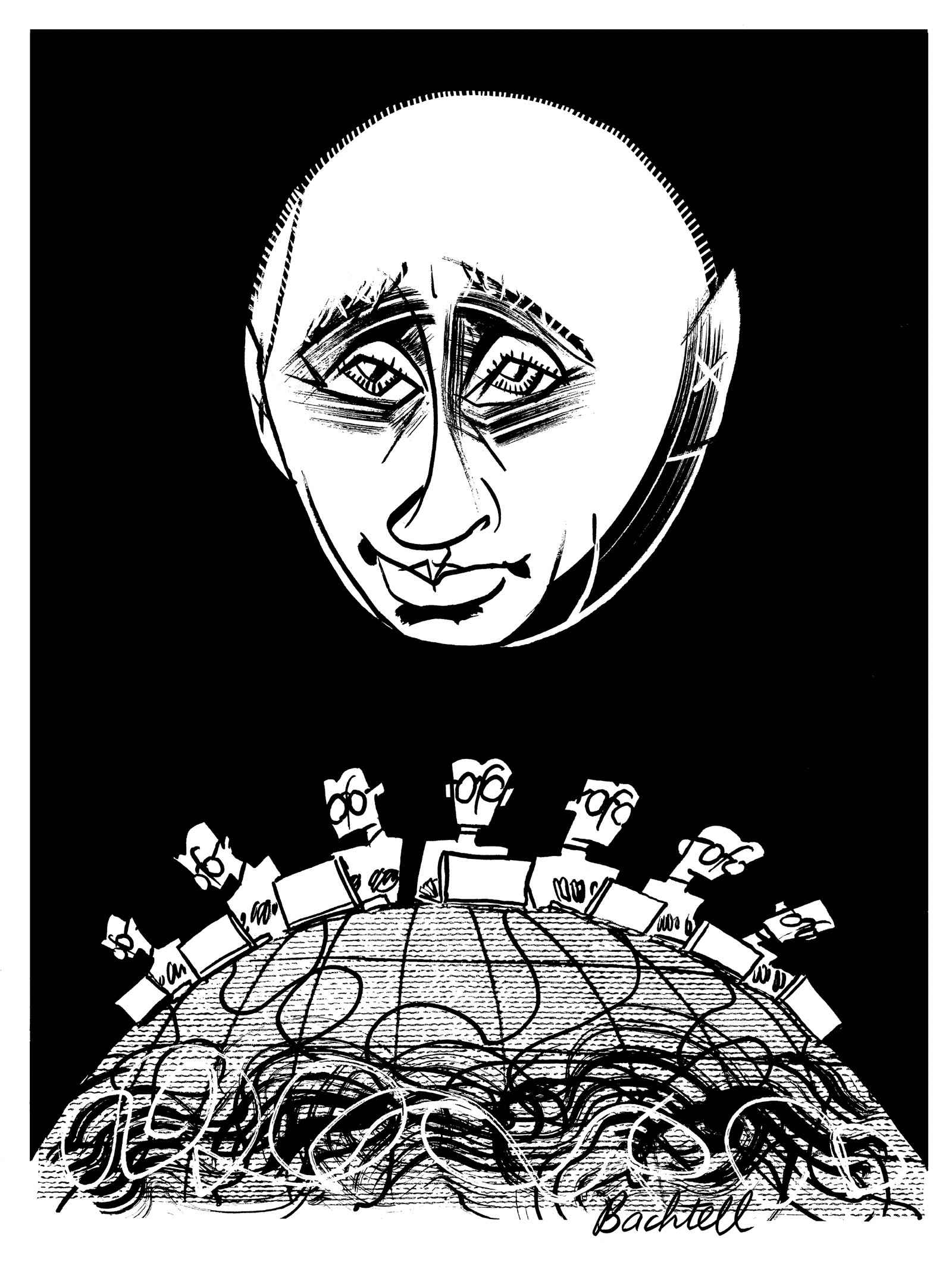

Absent rules of engagement, nation-states have a tremendous amount of leeway in how they use cyberweapons. In the case of Russia, cyberwarfare has enabled an economically weak country to pursue its ambitious geopolitical agenda with impunity. It has used cyberattacks on industrial control systems to cripple independent states that had been part of the Soviet Union in an effort to get them back into the fold, while sending a message to established Western democracies to stay out of its way.

As Russia has attacked, Greenberg has not been far behind, reporting on these incursions in Wired while searching for their perpetrators. Like the best true-crime writing, his narrative is both perversely entertaining and terrifying.

In 2007 Estonia, one of the most technologically advanced countries in the world, was hit with a cyberattack that shuttered media, banking, and government services over twenty-two days. As the BBC reported at the time, “The result for Estonian citizens was that cash machines and online banking services were sporadically out of action; government employees were unable to communicate with each other on e-mail; and newspapers and broadcasters suddenly found they couldn’t deliver the news.” Russia denied committing the attack, but there was little question of its provenance since it occurred shortly after officials in Tallinn relocated a Soviet-era war monument from the center of the city to a cemetery on its outskirts. The move incensed ethnic Russians within Estonia and their patrons in the Kremlin, and the cyberattack followed days later. More than a humiliating public spanking for Estonia, the attack was a test of the West’s resolve: Would it intervene and come to the aid of one of the newest members of NATO? The answer would matter not just to Estonia, but to other states that had gained independence after the collapse of the Soviet Union—those, like Latvia and Lithuania, that had also joined NATO, and those, like Georgia and Ukraine, that aspired to join. Article 5 of the NATO charter, which requires signatories to come to one another’s defense, would apply to a cyberattack only if the attack resulted in a major loss of life. So when NATO stood aside, Russia was emboldened to do it again.

It didn’t take long. The following year, Greenberg writes, Georgia was hit with a distributed denial of service (DDOS) attack that took down media outlets and military command centers. With the government struggling to communicate with its citizens and the outside world, Russia commandeered the airwaves, broadcasting its own version of events. The hackers themselves were freelancers, recruited from social media sites, which gave Russia plausible deniability, making retaliation that much harder. When Russian troops invaded the country about a month later, the hackers had prepared the battleground. It was the first time cyberwarfare was used to initiate an armed conflict, and once fighting was underway, the cybermercenaries supported the efforts of Russian troops on the ground by undermining basic services. Having used cyberwarfare in Estonia to test and then demonstrate the West’s disinclination to check its power, Russia upped the ante in Georgia, using those weapons to advance a territorial claim—again with little resistance from the West.

Ukraine was next. In the spring of 2014, during the lead-up to Ukraine’s presidential election—not long after Russia annexed Crimea in the southern part of the country, and as its troops advanced on the eastern region of Donbas—a pro-Russian hacking group called CyberBerkut began an assault on the country’s central election system. Though ultimately unsuccessful—the elections were able to proceed, with Petro Poroshenko winning the vote—the attack had another purpose, one that Americans, especially, will find familiar: to throw doubt on the legitimacy of the democratic process. This was reinforced when Russian state television, before the results had been tallied, announced the wrong victor, the far-right nationalist Dmytro Yarosh. Greenberg guides the reader through the tortured history of what then became nearly annual cyberstrikes on Ukraine, including the one about a year later, in 2015, when hackers took down a Kiev-based television company just ahead of local elections, and also shut down the airport and railroads, stranding travelers and preventing them from finding out what was going on; and the one right before Christmas Eve that year, when hackers disabled the power grid in the western part of the country, along with the backup battery system that, in an ordinary power outage, would have enabled electricity to keep on flowing. Suddenly there was nothing—no heat, no transportation, no way to get money or use a credit card, little food. It was the first known case, according to Greenberg, “of an actual hacker-induced blackout.”

The initial 2014 cyberattack on Ukraine was delivered by a piece of malware embedded in a PowerPoint attachment sent to government officials and timed to coincide with a NATO summit in Wales that addressed Ukraine. The December 2015 attack was set off by malware loaded into a Word document attached to an e-mail claiming to be a message from Parliament. Hackers often rely on careless or credulous people to gain access to a system and spread a virus—that’s how they were able to steal e-mails from John Podesta and other members of Hillary Clinton’s campaign staff in 2016.

In Ukraine, the virus implanted in the attachments was a variant of BlackEnergy, malware first created by a Russian hacker to flood websites with so many requests for information that they would crash—a standard DDOS attack—and offered for sale on Russian-language dark net forums for about $40. Much like the viruses that invade our bodies, the ones that infiltrate computers often evolve over time, becoming more virulent and complex. By the time BlackEnergy was unleashed in Kiev just before the 2014 elections, it could also steal banking information, destroy computer files, and seize industrial control systems. In its next iteration, developers loaded it with a host of new functions, including the ability to record keystrokes, take screenshots, and steal files and encryption keys, turning the malware into a tool for spying as well as for destruction. The cybersecurity researchers tracking BlackEnergy and reverse engineering its code couldn’t say who was augmenting its features, but they had a good idea where the hackers came from when they discovered that BlackEnergy’s “how-to” files were written in Russian.

Typically, hackers are more careful and cover their tracks. The use of non-state hackers in Georgia, for example, allowed the Kremlin to distance itself from the cyberattack. The recent discovery, reported by Greenberg in Wired, that Russian hackers had expropriated Iranian hacking tools and were impersonating Iranian hackers to attack academic, government, and scientific institutions in thirty-five countries is an object lesson in how hackers, state-sponsored or not, duck behind false flags. “Just a few years ago they were wearing clumsy masks,” Greenberg writes; “now they can practically wear another group’s identity as a second skin.” But back when researchers were trying to understand who was responsible for the original BlackEnergy attacks, the hackers were not so sophisticated: from clues left in their code, researchers noticed that they were fans of the 1965 science fiction classic Dune. Paying homage, the researchers nicknamed the group “Sandworm,” after a monstrous creature in the book that slithers along under the surface of the desert, rising up randomly to terrorize and wreak havoc. They did not know who Sandworm was, or where or when it would strike again—only that, odds were, it would.

Greenberg leans heavily on the detective work of cybersecurity researchers scattered around the world, many of them veterans of intelligence agencies now working for private firms. Their forensic skills enable them to parse Sandworm’s ever-evolving code, reading it like a manuscript whose author keeps revising. By one account, there was evidence that Sandworm had been infiltrating critical infrastructure—some of it in the United States—since 2011 and had already developed a weapon that could knock it out. Back then the group was just lurking, biding its time. When it did unleash its first assault on the Ukrainian electrical grid four years later, it used BlackEnergy to enter the system and a second piece of software, KillDisk, to destroy data inside it. Then, just shy of the anniversary of that blackout, Sandworm surfaced again, this time hitting “an artery” of the power grid with a bot that forced open circuit breakers and kept them open even as operators tried to shut them. As Greenberg describes it:

The hackers had, in other words, created an automated cyberweapon that performed the same task they’d carried out the year before, but now with inhuman speed. Instead of manually clicking through circuit breakers with phantom hands, they’d created a piece of malware that carried out that attack with cruel, machine-quick efficiency.

That attack, while powerful, was brief, lasting just an hour before systems engineers could disable it, but its public relations value was appreciable. It showed the world what Russia was capable of doing if anyone were to challenge it.

There was more to come, because four months earlier, a group calling itself “the Shadow Brokers” had announced on Twitter that it had stolen a cache of cyberweapons from the National Security Agency that it was offering for sale to the highest bidder. To entice buyers, the Shadow Brokers made some NSA tools available to anyone—criminal syndicates, nation-states, terrorists, lone-wolf hackers—for free. The most lethal of these targeted vulnerabilities that had been discovered a few years earlier, which meant that software patches for them already existed. But patches only work if they are applied by the user, and too often they are not. The hardware manufacturer Cisco, for instance, warned millions of customers that if their devices hadn’t already been patched, they were in danger of having their Internet traffic intercepted or manipulated.

The auction itself was a bust—the Shadow Brokers took in less than $1,000—but maybe money wasn’t the point. Two months after the initial release, the group dropped a second, smaller cache, this one revealing the location of NSA outposts around the world. And then, in April 2017, the Shadow Brokers released a program called Eternal Blue that gave hackers access to every version of the Windows operating system up to Windows 8—leaving “millions upon millions” of computers vulnerable. Though Microsoft, tipped off to the theft, had already released a software patch, it hardly mattered. It didn’t take long before hackers, thought to be from North Korea, infiltrated Britain’s National Health Service and, with a new piece of ransomware called WannaCry, held it for ransom.

Two months later, on June 27, Eternal Blue was used to spread another attack, NotPetya, that was, according to one cybersecurity researcher, “the fastest-propagating piece of malware we’ve ever seen.” Within minutes, it spread globally, toppling the worldwide shipping giant Maersk, the pharmaceutical multinational Merck, and the parent company of Nabisco and Cadbury, among many others. “For days to come, one of the world’s most complex and interconnected distributed machines, underpinning the circulatory system of the global economy itself, would remain broken,” Greenberg writes of the attack on Maersk, calling it “a clusterfuck of clusterfucks.” The company got its ships and ports back in operation only after nearly two weeks and hundreds of millions of dollars in losses, and only because an office in Ghana turned out to have the single computer that hadn’t been connected to the Internet at the time of the attack.

Ukraine, too, was a target of the Eternal Blue/NotPetya attack, and this time the outcome was pandemic:

It took forty-five seconds to bring down the network of a large Ukrainian bank. A portion of one major Ukrainian transit hub…was fully infected in sixteen seconds. Ukrenergo, the energy company…had also been struck yet again…the effect was like a vandal who first puts a library’s card catalog through a shredder, then moves on to methodically pulp its books, stack by stack.

By the end of the day, four hospitals, six power companies, two airports, more than twenty-two banks, and just about the entire Ukrainian federal government had been compromised. When a cybersecurity researcher examined the infected code, he saw that, in addition to Eternal Blue, it was also related to the KillDisk attacks from 2015. “From the attacker’s perspective, I could see the problem,” he told Greenberg. “KillDisk doesn’t spread itself. They were testing this tactic: how to find more victims, looking for the best infection vectors.”

The first time the lights went out in Ukraine, in 2015, American officials dismissed the possibility that such an attack could happen in the United States. They also dismissed the possibility that the US election system could be attacked, claiming it was too decentralized—an argument that continued to be made even after it was discovered to have been hacked during the 2016 presidential election. The timing was auspicious. Not long after the initial assault on the Ukrainian electric grid, Russian hackers began infiltrating the election system of every American state. They also made their way into at least one private election equipment vendor. The American intelligence community reported after the hack was discovered that no votes were changed, but this was a convenient fiction: the Department of Homeland Security did no forensic analysis of the state election systems, thirteen of which used paperless computerized voting machines that could not be audited.

Meanwhile, throughout his presidential campaign, Donald Trump constantly claimed that the system “was rigged” against him. Russia’s Ukrainian playbook—to weaken democracy by questioning the legitimacy of its elections—had been successfully exported. Whether votes were changed or not was, in a sense, immaterial: the elevation of Donald Trump to the presidency in the midst of Russian assaults on the election system and manipulation of the electorate through social media succeeded in undermining trust in the foundation of American government.

When, in July 2018, the Department of Justice, at the direction of the special counsel, Robert Mueller, indicted twelve Russian intelligence officers for interfering in the 2016 presidential election, the indictment revealed that two separate units of the GRU—Russia’s main military intelligence agency—were responsible for penetrating state election systems, hacking the e-mail of Democratic National Committee officials, sharing it with WikiLeaks, and pursuing a concerted disinformation campaign on social media. The Mueller investigation determined that one unit, 26165, also known as Fancy Bear or APT28, was responsible for stealing documents by hacking into accounts and inserting malware. The other, Unit 74455, distributed those documents and the stolen e-mails and ran the disinformation operation. Unit 74455 also hacked into state boards of elections and secretaries of state.

Years after cybersecurity researchers—with Andy Greenberg on their heels—began tracking Sandworm, looking for clues in the malicious code it had unleashed in Ukraine, Georgia, Estonia, the United States, and elsewhere, they finally knew who Sandworm was: not Unit 26165, which appeared to be the digital bomb-throwers, but its more covert partner, Unit 74455. It had not occurred to them that whoever had written the code might not be the ones deploying it.

No one expected the Kremlin to hand over GRU officers to the Justice Department—and it hasn’t—but the indictments were valuable nonetheless. They identified the perpetrators, located them within Russian intelligence, and revealed the mechanics of their American operation. By then, of course, the fact of the hacking was well established and American elections had been added to the Department of Homeland Security’s list of “critical infrastructure” that must be protected in cyberspace as well as in the physical universe. Voting may seem very different from wastewater treatment, but as one cybersecurity researcher told Greenberg, “just as election hacking is meant to rattle the foundations of citizens’ trust that their democracy is functioning, infrastructure hacking is meant to shake their faith in the fundamental security of their society.” So far, cyberwarfare has been fundamentally psychological warfare. Cyberwar will not be.

This Issue

December 19, 2019

*

He intended to present his findings at a cybersecurity conference in Atlanta, but when visa problems prevented him from attending, he decided to publish them online, in a blog post. ↩