PowerHouse Books

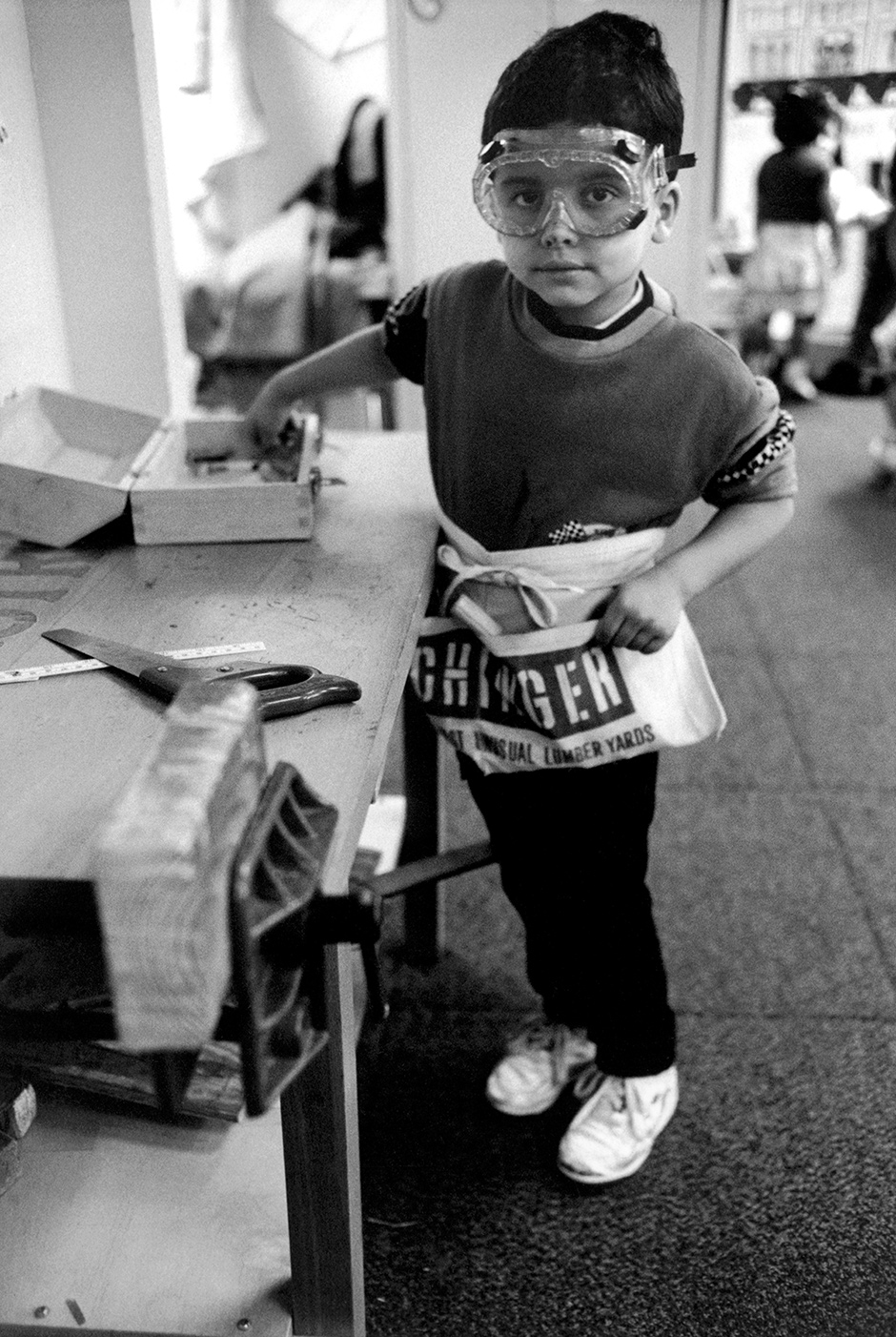

Shadows of residents of a housing project in Red Hook, Brooklyn, 2011; photograph by Jared Wellington, a twelve-year-old workshop participant, from Project Lives: New York Public Housing Residents Photograph Their World. Edited by George Carrano, Chelsea Davis, and Jonathan Fisher, it has just been published by PowerHouse Books.

Although an accurate estimate of how the poverty rate has changed since 1964 would show that we are much closer to achieving President Lyndon Johnson’s original goal of eliminating poverty than most readers of this journal probably believe, it would not tell us how effective specific antipoverty programs have been. The poverty rate could have declined despite the War on Poverty, not because of it. Assessing specific strategies for reducing poverty is the main task that Martha Bailey and Sheldon Danziger take on in Legacies of the War on Poverty.

When Johnson initiated the War on Poverty, he reportedly said that its political success depended on avoiding handouts. The initial focus was therefore on equalizing opportunity by helping poor children acquire the skills they would need to get steady jobs with at least average pay. I will discuss three of these programs: Head Start for poor preschoolers, Title I funding for public schools with high concentrations of poor children, and financial aid for low-income college students (now known as Pell Grants).

Any program aimed at young people, no matter how successful, inevitably takes a long time to change the poverty rate. Johnson knew that the War on Poverty’s political survival was also likely to require programs that produced more immediate results, so despite his worries about “handouts” he began looking for ways to cut the poverty rate by improving the “safety net” for the poor. I will discuss two of these programs: the big increase in Social Security benefits for the elderly and food stamps.

1.

Head Start

Head Start was primarily a program in which children attended preschool for a year or two, before they went to kindergarten. The Perry Preschool, which opened in the early 1960s in Ypsilanti, Michigan, is probably the most famous preschool in American history. It was a true experiment, selecting its pupils randomly from a pool of poor black three-year-olds and following both those admitted to Perry (the “treatment” group) and those not admitted (the “control” group) for many years.

After two years in the Perry program its graduates did much better on IQ and vocabulary tests than the control group, making Perry an important inspiration for Head Start. As time passed, however, the test score gap between Perry’s treatment and control groups shrank. Eventually the treatment group’s advantage was so small that it could have arisen by chance.

Almost every evaluation of Head Start has also found this same pattern. Children who spend a year in Head Start make more academic progress during that year than similar children who are not enrolled in preschool. Almost every follow-up has also found that once children enter kindergarten or first grade the Head Start graduates’ test score advantage shrinks, and over time it often disappears. For many years people who paid attention to this research (including me) interpreted it as showing that Head Start doesn’t work.

Nonetheless, Head Start’s reputation remained high among parents, and the program survived. Over the past decade its reputation has also risen dramatically among those who keep abreast of new research, largely because longer-term follow-ups have allowed evaluators to take a broader view of what differentiates a “good” school from a “bad” school. A follow-up of Perry Preschool children at age twenty-seven found that the treatment group was significantly less likely than the control group to have been arrested for either a serious or not-so-serious crime. In addition, boys had significantly higher monthly incomes and were significantly less likely to smoke, while girls were significantly less likely to have been put into special education or to have used drugs.1

In the chapter on Head Start in Legacies, Chloe Gibbs, Jens Ludwig, and Douglas Miller also summarize several recent studies of its long-term impact. In 2002 Eliana Garces, Duncan Thomas, and Janet Currie reported a comparison of siblings born between 1965 and 1977, one of whom had been in Head Start and one of whom had not. Just as in Perry, test score differences between the Head Start children and the control group declined with age. But when they compared white siblings, those who attended Head Start were 22 percentage points more likely to finish high school and 19 percentage points more likely to enter college than those who did not attend Head Start. Among black siblings, Head Start had little effect on high school graduation or college attendance, but blacks who had been in Head Start were 12 percentage points less likely to have been arrested and charged with a crime than those who had not been in Head Start.

In 2009 David Deming reported a similar study of siblings born between 1980 and 1986. Again the Head Start graduates’ test score advantage shrank as they got older, but those who had attended Head Start were less likely to have been diagnosed with a learning disability, less likely to have been held back a year in school, more likely to have graduated from high school, and more likely to report being in good health than their siblings who had not been in preschool.

These studies do not tell us why Head Start graduates fare better in adolescence and early adulthood. One popular theory is that Head Start helps children develop character traits like self-control and foresight that were not measured in early follow-ups but persist for a long time.2 Schools spend hundreds of hours teaching math and reading every year, so the advantage enjoyed by a sibling who attended Head Start becomes a smaller fraction of their total experience the longer they have been in school. In contrast, once traits like self-control and foresight are acquired, they may be more likely to persist and strengthen over time as their benefits become more obvious. Such theories are speculative, however.

Although the Head Start story still contains puzzles, it certainly suggests that holding elementary and middle schools accountable only for their students’ reading and math scores, as the Bush and Obama administrations have tried to do since 2001, misses much of what matters most for children’s futures. The Head Start story also suggests that figuring out what works can take a long time. This is a serious problem in a nation with a two-year electoral cycle and a twenty-second attention span. Had parents not embraced Head Start, we might well have abolished it before we discovered that, in important ways, it worked.

Title I

Supplementary federal funding for schools with high concentrations of poor students has been administered in a way that makes conclusive judgments about its impact on children very difficult. The chapter of Legacies by Elizabeth Cascio and Sarah Reber suggests that the federal payments to poor schools under Title I helped reduce spending disparities between rich and poor school districts in the 1960s and 1970s, but not after 1990. Nor does Title I seem to have reduced expenditure differences between schools serving rich and poor children in the same district. Finally, there is no solid evidence that Title I reduced differences between rich and poor school districts on reading or math tests. If there was any such impact, it was small.

Title I did, however, give the federal government more leverage when it wanted to force southern school districts to desegregate in the 1970s. Threats to withhold Title I funding induced many recalcitrant southern school districts to start mixing black and white children. School desegregation in the South appears to have been linked to gains in black students’ academic achievement. Title I also gave Washington more leverage over the states in 2001, when the Bush administration and Congress wanted to make every state hold its public schools accountable for raising reading and math scores. How well that worked remains controversial.

Financial Aid for College Students

The War on Poverty led to a big increase in federal grants and loans to needy college students. Bridget Terry Long’s chapter in Legacies shows that if we exclude veterans’ benefits and adjust for inflation, federal grants and loans to college students rose from $800 million in 1963–1964 to $15 billion in 1973–1974 and $157 billion in 2010–2011.3 Most of that increase took the form of low-interest loans backed by the federal government, not outright grants. Much of it also went to students from families with incomes well above the poverty line. But Pell Grants to relatively poor students began to grow after 1973–1974 and totaled $35 billion by 2010–2011.

The initial goal of all this federal spending was to reduce the difference between rich and poor children’s chances of attending and completing college. That has not happened. In the mid-1990s Congress broadened federal financial aid to include middle-income families, reducing low-income students’ share of the pot. But the college attendance gap had been widening even before that. Daron Acemoglu and Jörn-Steffen Pischke, for example, have compared the percentages of students in the high school classes of 1972, 1982, and 1992 who entered a four-year college within two years of finishing high school. Among students from the top quarter of the income distribution, the percentage entering a four-year college rose from 51 to 66 percent between 1972 and 1992. Among those from the bottom quarter of the income distribution, the percentage rose only about half as much, from 22 to 30 percent.4

The same pattern recurs when we look at college dropout rates. Martha Bailey and Susan Dynarski have compared college graduation rates among students who were freshmen in 1979–1983 to the rates among those who were freshmen eighteen years later, in 1997–2001.5 The graduation rate among the freshmen from the top quarter of the income distribution rose from 59 to 67 percent. Among freshmen from the bottom quarter of the income distribution, the graduation rate rose only half as much, from 28 to 32 percent. The gap between the graduation rates of high- and low-income freshmen therefore widened from 31 to 35 percentage points, a fairly small change, but clearly in the wrong direction.
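
For readers who want to check the arithmetic behind these comparisons, here is a minimal sketch. The percentages are the ones quoted in the text; the dictionary layout and the function name `summarize` are illustrative choices of mine, not anything taken from the studies cited.

```python
# Percentages quoted in the text, by income quartile.
# Layout (an assumption for illustration): {measure: {quartile: (earlier cohort, later cohort)}}
rates = {
    "entered four-year college": {"top": (51, 66), "bottom": (22, 30)},
    "graduated": {"top": (59, 67), "bottom": (28, 32)},
}


def summarize(measure):
    """Return each quartile's gain and the top-bottom gap, in percentage points."""
    top_before, top_after = rates[measure]["top"]
    bottom_before, bottom_after = rates[measure]["bottom"]
    return {
        "top_gain": top_after - top_before,
        "bottom_gain": bottom_after - bottom_before,
        "gap_before": top_before - bottom_before,
        "gap_after": top_after - bottom_after,
    }


for measure in rates:
    print(measure, summarize(measure))
```

On both measures the bottom quarter gained roughly half as many percentage points as the top quarter, so the gap between them grew.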

Rising college costs are the most obvious explanation for the rising correlation between college attendance and parental income. Tuition and fees in public four-year colleges and universities rose twice as fast as the Consumer Price Index between 1979–1982, when Bailey and Dynarski’s first cohort was entering college, and 1997–2000, when their second cohort was entering.

Rising tuition reflected several political changes: growing opposition to tax increases among those who elect state legislators, growing competition for state tax revenue from prisons, hospitals, and other services, and the fact that federal financial aid now allowed low-income students to pay a larger fraction of their college costs.

The combination of rising college costs and growing reliance on student loans to pay those costs led to a sharp rise in debt among both low-income and middle-income students. For students who chose their college major with one eye on the job market and went on to earn a four-year degree, repaying loans was often difficult but seldom impossible. But two thirds of the low-income freshmen and about half of middle-income freshmen in four-year colleges leave without earning a four-year degree. After that, earning enough to both support themselves and repay their college loans often proves impossible, especially if they have majored in a subject like teacher education, sociology, or the arts, which employers tend to view as “soft.”

Low-income high school students became increasingly aware that a lot of students like them were having trouble paying back their loans. As a result, many students whose parents could not pay the full cost of college decided to postpone college, take a job, and go to college after they had saved enough to cover the cost. Few earned enough to do that. Others went to college for a year, got so-so grades, concluded that borrowing more to stay in college was too risky, and dropped out. As an experiment in social policy, college loans may not have been a complete failure, but they cannot be counted as a success.

Social Security

When Social Security was established in the 1930s, many occupations and industries were not part of the system. Those exemptions were narrowed over the next three decades, but benefits are still limited to those who have worked in employment that is covered by the system, and they still vary depending on how much one earned. As a result, almost everyone thinks Social Security recipients have “earned” their benefits, even when the amount they receive is far larger than what they would have gotten if they had invested the same amount of money in a private pension fund.

Nonetheless, the combined effect of not having covered all workers during Social Security’s early years and the low wages and sporadic employment of many people who retired in the 1940s and 1950s meant that about 30 percent of the elderly were below the poverty line in 1964. When President Johnson looked for ways to cut poverty quickly without giving money to those whom he thought voters saw as undeserving, he almost inevitably chose the elderly poor as his first target.

After adjusting for inflation, Congress raised Social Security’s average monthly benefits by about 50 percent between 1965 and 1973.6 These increases aroused little organized opposition, partly because workers who had not yet retired expected that making the system more generous today would benefit them too once they reached retirement age. In addition, many of the elderly poor were relying on their middle-aged children to make ends meet in 1964. Every increase in Social Security benefits reduced the economic burden on those children, many of whom were far from affluent.

Higher real benefits, combined with increases in the proportion of surviving retirees who had worked in covered employment long enough to qualify for full benefits, cut the poverty rate among the elderly from 30 percent in 1964 to 15 percent in 1974. By 1984 the official poverty rate was the same among the elderly as among the non-elderly. Today the poverty rate among the elderly is only two thirds that among eighteen- to sixty-four-year-olds and half that among children. Raising Social Security benefits was, in short, the simplest, least controversial, and most effective antipoverty program of the past half-century.

The Safety Net

The premise of a “safety net” is that a rich society should make sure all its members have certain basic necessities like food, shelter, and medical care, even if they have done nothing to earn these benefits. After all, the argument goes, we feel obligated to provide these necessities even to convicted felons. Yet the idea remains controversial in the United States, where many politicians and voters habitually describe such programs as providing handouts. The two major national programs that try to help almost everyone in need are Medicaid and food stamps. These programs enjoy far less political support than Social Security. Thus far, however, they have survived. Here, I concentrate on food stamps.

Jane Waldfogel begins her chapter of Legacies by describing the nutrition of poor Americans in the mid-1960s. Her most vivid evidence comes from a 1967 report by Raymond Wheeler, a physician who had traveled through the South along with various colleagues, providing medical examinations for poor black children. Wheeler’s report chronicles the symptoms of hunger and malnutrition that he and his colleagues saw. No one who read it was likely to forget the third-world conditions it reported. Read alongside the Agriculture Department’s summary of its 1965–1966 Household Food Consumption Survey, which estimated that over a third of all low-income households had poor diets, and the Selective Service System’s report on why it found so many young men from the South unfit for military service, Wheeler’s report helped broaden political support for federal action to reduce hunger. That support was reinforced by a 1968 CBS documentary on Hunger in America. Nonetheless, the response was slow.

The Johnson administration inherited a tiny pilot program, established in 1961 by the Kennedy administration, that offered food stamps to poor people in a handful of counties. Congress expanded that program in 1964, but it was still limited to a small number of poor counties. Coverage expanded fairly steadily over the next decade, but food stamps did not become available throughout the United States until 1975. Today the program provides a maximum monthly benefit of $185 for each family member. The amount falls as a family’s cash income rises. It does not pay for anything but food, but it is more than the United States provided before Johnson launched the War on Poverty.

Because food stamps became available at different times in different counties, Douglas Almond, Hilary Hoynes, and Diane Schanzenbach have been able to produce quite convincing estimates of how their availability affected recipients’ health.7 They show, for example, that living in a county where food stamps were available during the last trimester of a mother’s pregnancy reduced her likelihood of having an underweight baby. They also show that living in a county where food stamps were available for more months between a child’s estimated date of conception and its fifth birthday improved the child’s health in adulthood, reducing the likelihood of what is known as “metabolic syndrome” (diabetes, high blood pressure, obesity, and heart disease).8

Nonetheless, the politics of food stamps remain complicated. The program’s political survival and growth have long depended on a log-rolling deal in which urban legislators who favor food stamps agree to vote for agricultural subsidies that they view as wasteful if rural legislators who favor agricultural subsidies agree to vote for food stamps that they view as unearned handouts. That deal could easily unravel.

2.

If I am right that poverty as we understood the term in the 1960s has fallen by three quarters, Democrats should be mounting more challenges to Republican claims that the War on Poverty failed. A first step would be to fix the official poverty measure. A second step would be to come clean about which parts of the War on Poverty worked and which ones do not appear to have worked, and stop supporting the parts that appear ineffective.

On the one hand, there have clearly been more successes than today’s Republicans acknowledge, at least in public. Raising Social Security benefits played a major part in cutting poverty among the elderly. The Earned Income Tax Credit cut poverty among single mothers. Food stamps improve living standards for most poor families. Medicaid also improves the lives of the poor. Even Section 8 rent subsidies, which I have not discussed, improve living standards among the poor families lucky enough to get one, although the money might do more good if it were distributed in a less random way. Head Start also turns out to help poor children stay on track for somewhat better lives than their parents had.

On the other hand, Republican claims that antipoverty programs were ineffective and wasteful also appear to have been well founded in many cases. Title I spending on elementary and secondary education has had few identifiable benefits, although the design of the program would make it hard to identify such benefits even if they existed. Relying on student loans rather than grants to finance the early years of higher education has discouraged an unknown number of low-income students from entering college, because of the fear that they will not be able to pay the loans back if they do not graduate. Job-training programs for the least employable have also yielded modest benefits. The community action programs that challenged the authority of elected local officials during the 1960s might have been a fine idea if they had been privately funded, but using federal money to pay for attacks on elected officials was a political disaster.

The fact that the War on Poverty included some unsuccessful programs is not an indictment of the overall effort. Failures are an inevitable part of any program that requires experimentation. The problem is that most of these programs still exist. Job-training programs that don’t work still pop up and disappear. Title I of the Elementary and Secondary Education Act still pushes money into the hands of educators who do not raise poor children’s test scores. Increasingly large student loans still allow colleges to raise tuition faster than family incomes rise, and rising costs still discourage many poor students from attending or completing college.

It takes time to produce disinterested assessments of political programs. The Government Accountability Office has done good assessments of some narrowly defined programs, but assessing strategic choices about how best to fight poverty has been left largely to journalists, university scholars, and organizations like the Russell Sage Foundation, which paid for Legacies. Scholars are not completely disinterested either, but in this case we can be grateful that a small group has helped us reach a more balanced judgment about a noble experiment. We did not lose the War on Poverty. We gained some ground. Quite a lot of ground.

—This is the second of two articles.

1. The best analysis of these effects appears in a recent paper by James Heckman, Rodrigo Pinto, and Peter Savelyev, “Understanding the Mechanisms Through Which an Influential Early Childhood Program Boosted Adult Outcomes,” The American Economic Review, Vol. 103, No. 6 (October 2013).

2. See the paper by Heckman, Pinto, and Savelyev cited in footnote 1 for some evidence on this.

3. These expenditures are reported in 2010 dollars.

4. Daron Acemoglu and Jörn-Steffen Pischke, “Changes in the Wage Structure, Family Income, and Children’s Education,” European Economic Review, Vol. 45 (2001).

5. Martha J. Bailey and Susan M. Dynarski, “Inequality in Postsecondary Education,” in Whither Opportunity?: Rising Inequality, Schools, and Children’s Life Chances, edited by Greg J. Duncan and Richard J. Murnane (Russell Sage Foundation, 2011).

6. See Kathleen McGarry, “The Safety Net for the Elderly,” Legacies of the War on Poverty, Figure 7.1, p. 184.

7. See Douglas Almond, Hilary W. Hoynes, and Diane Whitmore Schanzenbach, “Inside the War on Poverty: The Impact of Food Stamps on Birth Outcomes,” The Review of Economics and Statistics, Vol. 93, No. 2 (May 2011).

8. See Hilary Hoynes, Diane Whitmore Schanzenbach, and Douglas Almond, “Long-Run Impacts of Childhood Access to the Safety Net,” Institute for Policy Research, Northwestern University, November 2012.