1.

Facebook is a company that has lost control—not of its business, which has suffered remarkably little from its series of unfortunate events since the 2016 election, but of its consequences. Its old slogan, “Move fast and break things,” was changed a few years ago to the less memorable “Move fast with stable infra.” Around the world, however, Facebook continues to break many things indeed.

In Myanmar, hatred whipped up on Facebook Messenger has driven ethnic cleansing of the Rohingya. In India, false child abduction rumors on Facebook’s WhatsApp service have incited mobs to lynch innocent victims. In the Philippines, Turkey, and other receding democracies, gangs of “patriotic trolls” use Facebook to spread disinformation and terrorize opponents. And in the United States, the platform’s advertising tools remain conduits for subterranean propaganda.

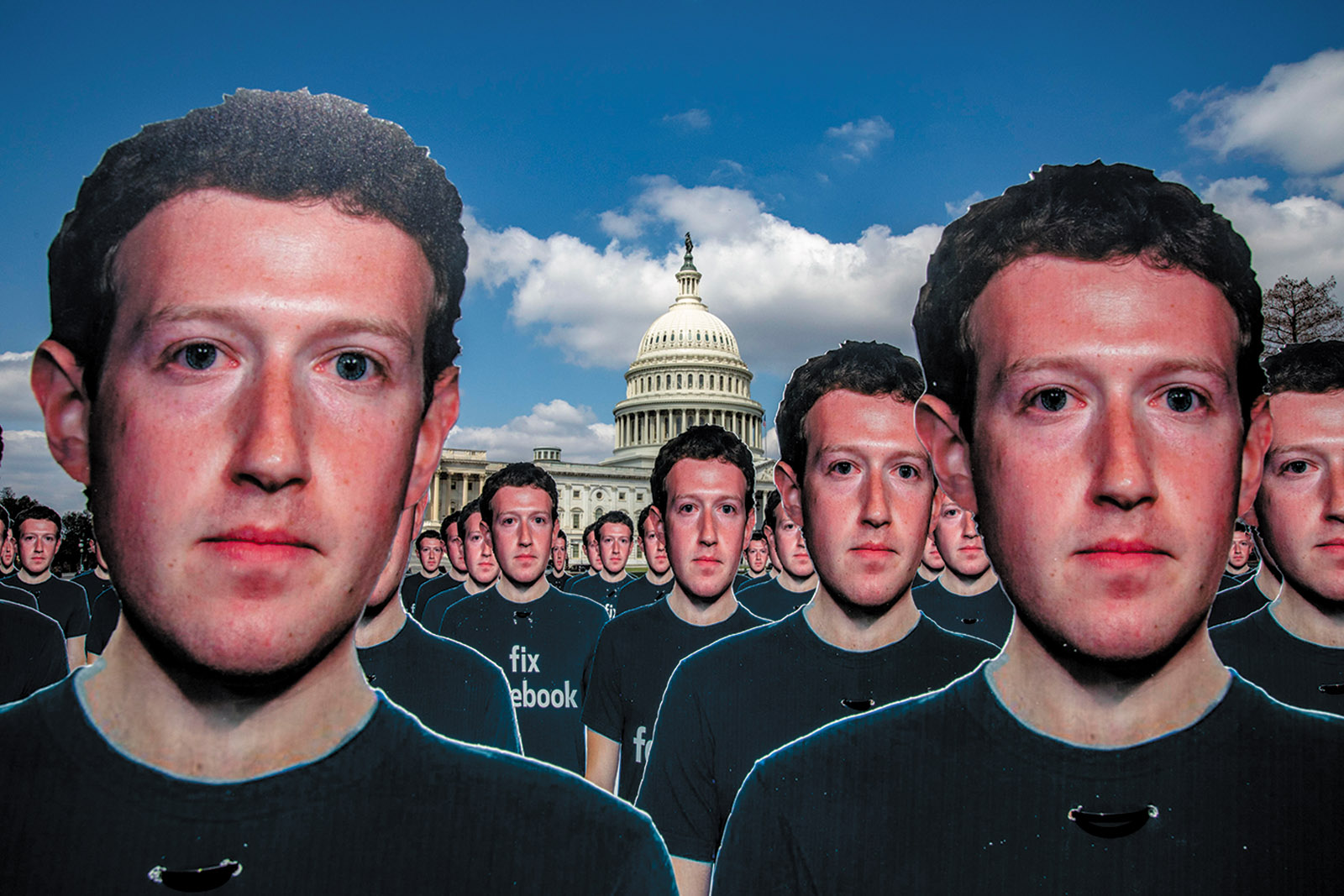

Mark Zuckerberg now spends much of his time apologizing for data breaches, privacy violations, and the manipulation of Facebook users by Russian spies. This is not how it was supposed to be. A decade ago, Zuckerberg and the company’s chief operating officer, Sheryl Sandberg, championed Facebook as an agent of free expression, protest, and positive political change. To drive progress, Zuckerberg always argued, societies would have to get over their hang-ups about privacy, which he described as a dated concept and no longer the social norm. “If people share more, the world will become more open and connected,” he wrote in a 2010 Washington Post Op-Ed. This view served Facebook’s business model, which is based on users passively delivering personal data. That data is used to target advertising to them based on their interests, habits, and so forth. To increase its revenue, more than 98 percent of which comes from advertising, Facebook needs more users to spend more time on its site and surrender more information about themselves.

The import of a business model driven by addiction and surveillance became clearer in March, when The Observer of London and The New York Times jointly revealed that the political consulting firm Cambridge Analytica had obtained information about 50 million Facebook users in order to develop psychological profiles. That number has since risen to 87 million. Yet Zuckerberg and his company’s leadership seem incapable of imagining that their relentless pursuit of “openness and connection” has been socially destructive. With each apology, Zuckerberg’s blundering seems less like naiveté and more like malignant obliviousness. In an interview in July, he contended that sites denying the Holocaust didn’t contravene the company’s policies against hate speech because Holocaust denial might amount to good faith error. “There are things that different people get wrong,” he said. “I don’t think that they’re intentionally getting it wrong.” He had to apologize, again.

It’s not just external critics who see something fundamentally amiss at the company. People central to Facebook’s history have lately been expressing remorse over their contributions and warning others to keep their children away from it. Sean Parker, the company’s first president, acknowledged last year that Facebook was designed to cultivate addiction. He explained that the “like” button and other features had been created in response to the question, “How do we consume as much of your time and conscious attention as possible?” Chamath Palihapitiya, a crucial figure in driving Facebook’s growth, said he feels “tremendous guilt” over his involvement in developing “tools that are ripping apart the social fabric of how society works.” Roger McNamee, an early investor and mentor to Zuckerberg, has become a full-time crusader for restraining a platform that he calls “tailor-made for abuse by bad actors.”

Perhaps even more damning are the recent actions of Brian Acton and Jan Koum, the founders of WhatsApp. Facebook bought their five-year-old company for $22 billion in 2014, when it had only fifty-five employees. Acton resigned in September 2017. Koum, the only Facebook executive other than Zuckerberg and Sandberg to sit on the company’s board, quit at the end of April. By leaving before November 2018, the WhatsApp founders walked away from $1.3 billion, according to The Wall Street Journal. When he announced his departure, Koum said that he was “taking some time off to do things I enjoy outside of technology, such as collecting rare air-cooled Porsches, working on my cars and playing ultimate Frisbee.”

However bad he felt about neglecting his Porsches, Koum was thoroughly fed up with Facebook. He and Acton are strong advocates of user privacy. One of the goals of WhatsApp, they said, was “knowing as little about you as possible.” They also didn’t want advertising on WhatsApp, which was supported by a 99-cent annual fee when Facebook bought it. From the start, the pair found themselves in conflict with Zuckerberg and Sandberg over Facebook’s business model of mining user data to power targeted advertising. (In late September, the cofounders of Instagram also announced their departure from Facebook, reportedly over issues of autonomy.)

At the time of the acquisition of WhatsApp, Zuckerberg had assured Acton and Koum that he wouldn’t share its user data with other applications. Facebook told the European Commission, which approved the merger, that it had no way to match Facebook profiles with WhatsApp user IDs. Then, simply by matching phone numbers, it did just that. Pooling the data let Facebook recommend that WhatsApp users’ contacts become their Facebook friends. It also allowed it to monetize WhatsApp users by enabling advertisers to target them on Facebook. In 2017 the European Commission fined Facebook $122 million for its “misleading” statements about the takeover.

Acton has been less discreet than Koum about his feelings. Upon leaving Facebook, he donated $50 million to the Signal Foundation, which he now chairs. That organization supports Signal, a fully encrypted messaging app that competes with WhatsApp. Following the Cambridge Analytica revelations, he tweeted, “It is time. #deletefacebook.”

2.

The growing consensus is that Facebook’s power needs checking. Fewer agree on what its greatest harms are—and still fewer on what to do about them. When Mark Zuckerberg was summoned by Congress in April, the toughest questioning came from House Republicans convinced that Facebook was censoring conservatives, in particular two African-American sisters in North Carolina who make pro-Trump videos under the name “Diamond and Silk.” Facebook’s policy team charged the two with promulgating content “unsafe to the community” and indicated that it would restrict that content. Facebook subsequently said the complaint was sent in error but has never explained how that happened, or how it decides that some opinions are “unsafe.”

Democrats were naturally more incensed about the twin issues of Russian interference in the 2016 election and the abuse of Facebook data by Cambridge Analytica in its work for Trump’s presidential campaign. The psychological profiles Cambridge Analytica created with this data may have been snake oil, and it’s not entirely clear whether Trump’s digital team made use of them in its voter targeting efforts. But the firm’s ability to access so many private profiles has, more than anything else, prompted the current backlash against Facebook. Cambridge Analytica stands as proxy for a range of the company’s other damage: its part in spreading fake news, undermining independent journalism, and suppressing dissent and fomenting ethnic hatred in authoritarian societies. One might also mention psychological harms such as the digital addiction Sean Parker decried, the fracturing of attention that Tristan Harris campaigns against, and the loss of empathy Sherry Turkle has written about eloquently.[1]

In Antisocial Media, Siva Vaidhyanathan argues that the core problem is the harm Facebook inflicts on democracies around the world. A professor of media studies at the University of Virginia, Vaidhyanathan is a disciple of Neil Postman, the author of Amusing Ourselves to Death. In that prescient pre-Internet tract, Postman wrote that Aldous Huxley, not Orwell, portrayed the dystopia most relevant to our age. The dangers modern societies face, Postman contends, are less censorship or repression than distraction and diversion, the replacement of civic engagement by perpetual entertainment.

Vaidhyanathan sees Facebook, a “pleasure machine” in which politics and entertainment merge, as the culmination of Postman’s Huxleyan nightmare. However, the pleasure that comes from absorption in social media is more complicated than the kind that television delivers. It encourages people to associate with those who share their views, creating filter bubbles and self-reinforcing feedback loops. Vaidhyanathan argues that by training its users to elevate feelings of agreement and belonging over truth, Facebook has created a gigantic “forum for tribalism.”

He describes Zuckerberg’s belief that people ought to care more about Facebook’s power to “connect” them than about how it uses their data as a species of techno-narcissism, a Silicon Valley affliction born of hubris and missionary zeal. Its unquestioned assumption is that if people around the world use our tools and toys, their lives will instantly improve by becoming more like ours. This attitude is expressed in products like Free Basics, a mobile app that supplies no-cost access to Facebook and a small selection of other websites in developing countries. In 2016 India’s telecom regulator blocked the app on the basis that a Facebook-curated Internet violated the principle of net neutrality. Marc Andreessen, a Facebook board member, responded on Twitter that the ruling was an expression of India’s “economically catastrophic” anticolonialism—effectively casting Facebook as a beneficent neocolonial power. While both he and Zuckerberg later apologized, both evinced incomprehension that anyone would reject the irresistible bargain of free Facebook.

In the heady days of the Arab Spring, it was easy to get swept along by such naive good intentions and by the promise of social media as a benevolent political force. Wael Ghonim, an Egyptian Google employee, used a Facebook page as an organizing tool in the revolution that overthrew Hosni Mubarak in 2011. But that early enthusiasm, Vaidhyanathan writes, “blinded many to the ways social media—especially Facebook—could be used by authoritarian governments to surveil, harass, and suppress dissidents.” Egypt is only one of the places where the digital levers of the opposition became the cudgels of the regime, once it discovered how useful they were for disseminating propaganda and monitoring dissent. After the Egyptian revolution was hijacked and then reversed, Ghonim reconsidered his enthusiasm for Facebook. “Social media only amplified that state by amplifying the spread of misinformation, rumors, echo chambers, and hate speech,” he said in a 2015 TED talk. “The environment was purely toxic. My online world became a battleground filled with trolls, lies, and hate speech.”

As Facebook proved a better tool for autocrats than for revolutionaries, the protest machine became a surveillance and disinformation machine. In Cambodia, Hun Sen’s government has poured money into Facebook advertising to build up an inflated following, while an “experiment” the company performed in six small countries—moving news content out of the primary News Feed into a separate “Explore” section—made independent media sources all but invisible. In the Philippines, where the average user spends nearly four hours a day on social media, Rodrigo Duterte’s government has carried out a campaign of legal harassment against the brave news start-up Rappler—which is, of course, mainly distributed via Facebook.

The list of countries in which the company has effectively taken sides against the opposition is very long. When complaints from human rights groups grow loud enough, Facebook eventually responds, first by throwing up its hands and then by hiring regional specialists, expressing regret, and promising to “do more.” Such gestures barely help to level a playing field tilted in favor of autocrats.

When he turns to the 2016 US election, Vaidhyanathan is especially good on the details of how Facebook not so inadvertently assisted the Trump campaign. “Project Alamo,” Trump’s digital operation, was far less sophisticated than Hillary Clinton’s. But precisely because it had so little digital expertise, Trump’s side relied heavily on Facebook employees who were provided to the Trump campaign as embedded advisers. Facebook supplies these technical experts to all large advertisers, and in Trump’s case it made sure to find ones who identified as Republicans (similar advisers were offered to the Clinton campaign but turned down). These technicians helped the campaign raise over $250 million and spend $70 million per month in the most effective way possible on the platform.

The best weapon of Trump’s digital chief Brad Parscale was something called “Custom Audiences from Customer Lists,” an advertiser product released by Facebook in 2014. This tool allowed the Trump campaign to upload Republican voter lists, match them with Facebook’s user database, and micro-target so-called dark posts to groups of as few as twenty people. Using Democratic voter lists, the campaign deployed the same kind of finely tuned, scientifically tested messages to suppress votes, for example by sending Haitian-Americans in South Florida messages about Bill Clinton’s having failed to do enough for Haiti. And because Trump’s inflammatory messages generated such high rates of “engagement,” Facebook charged his campaign a small fraction of the prices Hillary Clinton’s had to pay for its Facebook advertising.

While it helped Trump cultivate precision toxins in digital petri dishes, Facebook was simultaneously undermining the old fact-based information ecology. As always, this destruction was incidental to Facebook’s goals of growing its user base, increasing engagement, and collecting more data. But much as it tries to do with individual users, Facebook got the news industry hooked. Publishers of newspapers and magazines understood that supporting the company’s constantly changing business priorities—Instant Articles on mobile, short-form video, live video, and so on—would lead to more traffic for their own pages and stories.

For a time, the benefit flowed in both directions. But last year, under pressure to stop promoting fake news, Facebook began downgrading published content as a whole in its News Feed algorithm, prompting sharp declines in revenue and layoffs at many media organizations. Since January, a new emphasis on what Facebook calls “trusted” sources has had perverse effects, boosting traffic for untrustworthy sites, including Fox News and The Daily Mail, while reducing it for more reliable news organizations like The New York Times, CNN, and NBC. The reasons are unclear, but it appears that Facebook’s opaque methodology may simply equate trust with popularity.

Vaidhyanathan does not think our concerns should stop with Facebook. He says that Apple, Amazon, Microsoft, and Google also share a totalizing aspiration to become “The Operating System of Our Lives.” But Facebook is what we should worry about most because it is the only one within range of realizing that ambition. It currently owns four of the top ten social media platforms in the world—the top four, if you exclude China and don’t count YouTube as a social network. Zuckerberg’s company had 2.2 billion monthly active users in June 2018, more than half of all people with Internet access around the world. WhatsApp has 1.5 billion, Facebook Messenger 1.3 billion, and Instagram 1 billion. All are growing quickly. Twitter, by comparison, has 330 million and is hardly growing.

What would the world look like if Facebook succeeded in becoming the Operating System of Our Lives? That status has arguably been achieved only by Tencent in China. Tencent runs WeChat, which combines aspects of Facebook, Messenger, Google, Twitter, and Instagram. People use its payment system to make purchases from vending machines, shop online, bank, and schedule appointments. Tencent also connects to the Chinese government’s Social Credit System, which gives users a score, based on data mining and surveillance of their online and offline activity. You gain points for obeying the law and lose them for such behavior as traffic violations or “spreading rumors online.”

Full implementation is not expected till 2020, but the system is already being used to mete out punishments to people with low scores. These include preventing them from traveling, restricting them from certain jobs, and barring their children from attending private schools. In the West online surveillance is theoretically voluntary, the price we pay for enjoying the pleasure machine—a privatized 1984 by means of Brave New World. It is possible to imagine a future in which Facebook becomes more and more integrated into finance, health, and communications, and becomes not just a way to waste time but a necessity for daily life.

3.

What is to be done about this blundering cyclops? Jaron Lanier’s proposal is a consumer movement. Lanier emerged in Silicon Valley a decade ago as a humanist critic of his fellow techies. One of the inventors of virtual reality in the 1980s, he has been playing the role of a dreadlocked, digital Cassandra in a series of manifestos expressing his disappointment that digital technology has turned out to detract from human interaction more than enhance it. In Ten Arguments for Deleting Your Social Media Accounts Right Now, Lanier proposes that we follow his lead in opting out of Facebook, Twitter, Snapchat, Instagram, and any other platform that exploits user data for purposes of targeted advertising, as opposed to charging users a small fee, the way WhatsApp used to.

Lanier’s strained acronym for the business model he objects to is BUMMER, which stands for “Behaviors of Users Modified, and Made into an Empire for Rent.” BUMMER companies are those that work ceaselessly to addict their users, collect information about them, and then resell their attention to third parties. Lanier makes his case at the level of detail you’d expect from a critic boycotting his own subject. But drawing mainly on his own past experiences and examining recent news, he’s able to make a persuasive case that addiction to social media makes people selfish, disagreeable, and lonely while corroding democracy, truth, and economic equality.

His solution, on the other hand, is wildly inadequate to the immensity of the problem. Refusal to participate in digital services that don’t charge their customers, which Lanier says should be our rule, is little more than a juice fast for the social media–damaged soul. More than two thirds of American adults use Facebook. Over time, changing consumer preferences may erode this monopoly; use among US teenagers appears to be in sharp decline. However, much of the shift is to Instagram and Messenger, other social platforms that are owned by, and share data with, Facebook.

Unless social media companies follow Lanier’s prompt to shift their business model to user fees, we’ll need to restrain their exploitation of data through regulation. Until recently, such constraint came almost exclusively from Europe, where the wide-ranging General Data Protection Regulation (GDPR) recently went into effect. Attitudes in the US may be shifting, however. In June Governor Jerry Brown signed a data privacy act extending GDPR-type protections to Californian consumers, although this law won’t take effect for another two years. And at the federal level, a series of investigations into the Cambridge Analytica breach are underway, including one by the Federal Trade Commission into whether Facebook violated a 2011 settlement in which it agreed to offer privacy settings to users. Facebook has disclosed that separate, overlapping investigations are underway at the FBI, Justice Department, and Securities and Exchange Commission.

It will be difficult to limit the company’s power. Under what has been called “surveillance capitalism,” social media companies that provide free products always have an incentive to violate privacy. That is how Facebook makes money. Because Facebook profits by making more personal data available for use by third parties, its business model points in the direction of abuse. In the case of its 2011 FTC agreement, Facebook appears to have simply sloughed off a legal obligation, preferring to risk fines rather than accept an impediment to growth. There is little reason to think it won’t make the same choice again.[2]

Regulation might make Facebook still more powerful. Network effects, which make a service like Facebook more valuable to users as it grows larger, incline social media companies toward monopoly. The costs of legal compliance for rules like the GDPR, which can be ruinous for smaller start-ups, tend to lock in the power of incumbents even more. Unlike smaller companies, Facebook also has the ability to engage in regulatory arbitrage by moving parts of its business to the cities, states, and countries willing to offer it the largest subsidies and the lightest regulatory touch; it recently shifted its base of operations away from Ireland, where it had gone to avoid taxes, so that 1.5 billion users in Africa, Asia, Australia, and Latin America wouldn’t be covered by the GDPR. Zuckerberg and Sandberg have both said they expect regulation and would welcome the right kind—presumably regulation compatible with more users, more engagement, and more data.

What Facebook surely would not welcome is more vigorous antitrust enforcement. Blocking Facebook’s acquisitions of Instagram and WhatsApp would have been the FTC’s best chance to prevent the behemoth from becoming an ungovernable superpower. Reversing those decisions through divestiture or at least preventing these platforms from sharing customer data would be the best way to contain Facebook’s influence. At a minimum, the company should not receive approval to acquire any other social networks in the future.

But current antitrust doctrine may not be up to the task of taking on Facebook or the other tech leviathans. The problem is not establishing that Facebook, with 77 percent of US mobile social networking traffic, has a monopoly. It’s that under the prevailing legal standard of “consumer harm,” plaintiffs need to show that a monopoly leads to higher prices, which isn’t an issue with free products. When the Clinton-era Justice Department sued Microsoft in 1998, it argued the case on the novel grounds that the software giant was abusing its Windows monopoly to stifle innovation in the market for Web browsers. There is evidence that Facebook too has tried to leverage its monopoly to preempt innovation by copying its more inventive competitors, as when Instagram cribbed “Stories” and other popular features from Snapchat.

But the Microsoft precedent is not encouraging. After a federal judge found that Microsoft had abused its monopoly and ruled that it should be separated into two companies, the US Court of Appeals overturned the ruling on multiple grounds. The government has brought no comparably ambitious antitrust action against a major technology company in the two decades since. If the Justice Department were to become interested in breaking up Facebook, it would need the FTC to expand its definition of “consumer harm” to explicitly include violations of data privacy.

After the Cambridge Analytica scandal broke, Facebook took out full-page ads in leading newspapers to apologize for its “breach of trust.” In May, Zuckerberg took the stage at F8, Facebook’s annual gathering of partners and developers, to say he was sorry yet again. “What I’ve learned this year is that we need to take a broader view of our responsibility,” he said. “It’s not enough to just build powerful tools. We need to make sure that they’re used for good, and we will.” Zuckerberg says the company is now working to develop better artificial intelligence tools to weed out manipulated images and fake posts, although he says this could take a decade. At thirty-four, he’s got the time. We may not.

This Issue

October 25, 2018

[1] See my “We Are Hopelessly Hooked,” The New York Review, February 25, 2016.

[2] For a comprehensive list of Facebook’s privacy violations, see Natasha Lomas, “A Brief History of Facebook’s Privacy Hostility Ahead of Zuckerberg’s Testimony,” TechCrunch, April 10, 2018.