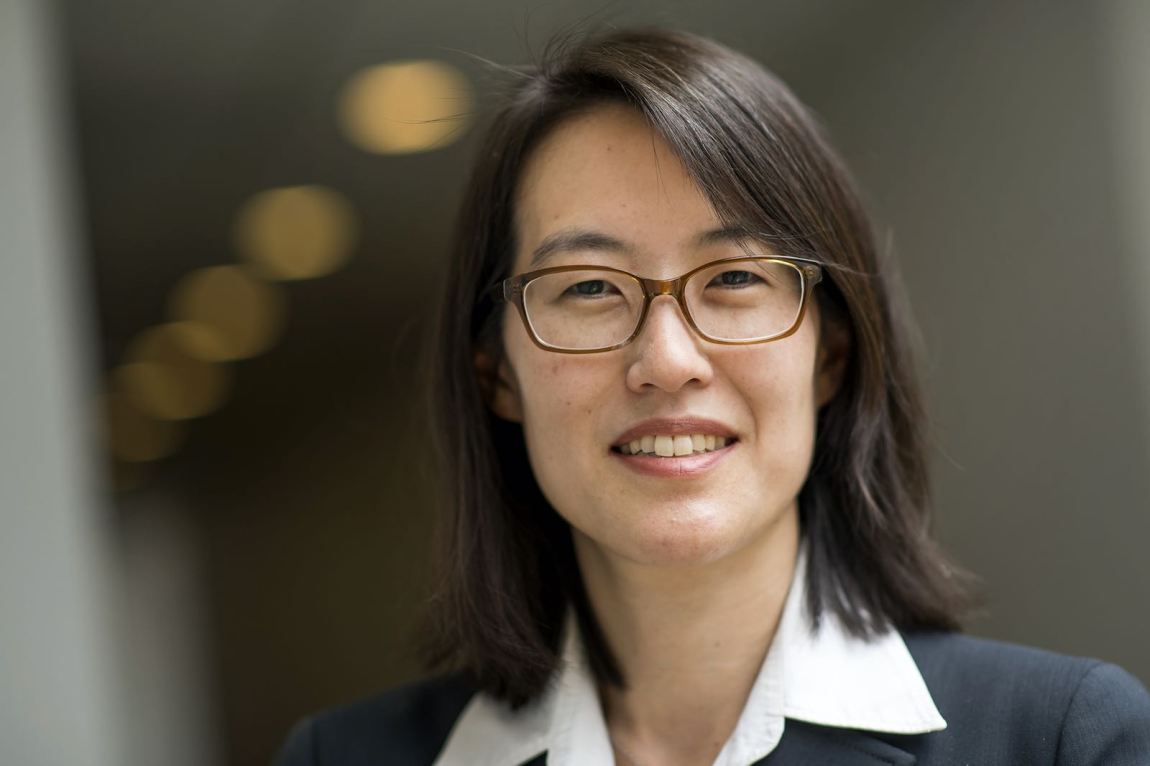

Ellen K. Pao was an unlikely troublemaker. A graduate of Princeton, Harvard Law School, and Harvard Business School, she was hired in 2005 by Silicon Valley’s leading venture capital firm, Kleiner Perkins. This electrical engineer, lawyer, and Mandarin speaker entered Kleiner as a partner.

So far, so expected. But in 2012, after she and other women executives were passed over for promotion, Pao filed a discrimination lawsuit against the company, claiming, among other things, sexual harassment, exclusion, and retaliation.

Three years later, the case finally went to trial. In the media reporting of that pre-#MeToo moment, Pao found herself depicted as an entitled ingrate who was taking advantage of discrimination laws meant to protect the less privileged. The jury sided with Kleiner Perkins.

It was all a bruising experience that tested her mettle and altered her worldview. “It made me see that high tech and venture capital were problematic industries,” she told me when we spoke, a few days ago.

That insight was one that guided the next step in her career, when she joined the social media and chat site Reddit in 2013. After she became interim CEO, in November 2014, she tried to take on what she saw as problematic about her own company. But the dominant ethos of Silicon Valley—one that reflected the libertarian values of its billionaire male executives and which had recently been brutally enforced by the Gamergate affair—meant that Pao’s new policies barring revenge porn and online bullying led to a vicious backlash: more than 200,000 Reddit users signed a petition calling for her removal. Pao resigned, leaving the company in July 2015.

Five years later, much has changed. She now runs a nonprofit she founded called Project Include, which consults for technology companies, helping them to achieve diversity and fairness in their hiring. (The Craig Newmark Foundation and Hollywood’s Time’s Up foundation are among her funders.)

More startlingly, the titans of Silicon Valley are now on the defensive: Facebook is under growing pressure to tackle the disinformation that has swamped its site; Twitter is asterisking the president’s tweets when they don’t meet prescribed standards; and Google now faces a major antitrust suit from the Department of Justice.

Amid this, Pao has emerged as a critical eminence in the tech industry, a visionary who was ahead of her time in trying to tame its worst impulses. Her essays appear in The Washington Post, Time magazine, and Vox’s re/code; and in 2017, she published a memoir, Reset: My Fight for Inclusion and Lasting Change.

As Americans prepared to vote in a historically consequential election—one in which social media and virtual communication amid a pandemic will have had incalculable influence—she and I spoke at length via Zoom. An edited and condensed version of the conversation follows.

Claudia Dreifus: In your writings and speeches, you sometimes describe social media as a kind of Paradise Lost. What do you mean?

Ellen Pao: Well, the social media sites were started with this idea that more conversation was a great thing. There was this grandiose vision that you could have something that unified and connected the world. But the founders of these businesses—Facebook, Twitter—came from, for want of another term, privilege.

They were white men, often with limited life experiences and myopic views. For them, talking on the Internet was a great thing.

When problems started to emerge, they didn’t experience them and so they didn’t take them as seriously as they should have. One of the most obvious was that women, non-binary, Black, Latinx, and indigenous people were being harassed, bullied, and pushed off the Internet. The platforms were hosting these really one-sided conversations that were often racist and misogynistic.

All this was happening at the same moment that these companies were seeking to encourage user engagement. Well, what drives that? It’s often conflict and anger. The platforms became the scene of screaming matches. We got divides instead of bridges. It’s actually how, I think, we ended up with a lot of the white supremacy you see online these days.

Wasn’t white supremacy ubiquitous long before the Internet?

People hid it more. And there was probably less of it around.

What was new was that social media allowed these voices a bigger and bigger platform. White supremacists actually recruited from the sites. At one point somebody wrote that Reddit was the biggest source of recruits for one of the white supremacist groups.

My point is that because of social media, the supremacists stopped hiding. That was a huge change. I blame the platforms for it.

Can a lot of the bad stuff be attributed to the anonymity that’s permitted on the Internet? People don’t have to give their real names or stand behind what they say. Would requiring verifiable real names be helpful?

I think that would have helped, say, eight years ago. Today, people are no longer hiding behind anonymity. We’ve got these parades of white supremacists who are not wearing hoods and are not afraid of being photographed. And they are proudly sharing it on their Facebook feeds. There’s no stigma or shame. I don’t think people care anymore about whether their names are attached to really negative content.

Just this month, after years of lobbying by human rights organizations, Mark Zuckerberg announced he was going to bar Holocaust denial from Facebook. Many wondered what took him so long…

I read that it’s taken them ten years to get this. Two years ago, Mark Zuckerberg said something like, “Hey, everybody has a right to their opinions, and you can have an opinion that the Holocaust didn’t happen.”

The Holocaust is a fact, not an opinion.

Have social media executives been reluctant to curb hate speech on their platforms?

They talk about how they don’t want hate. And they did take down posts from ISIS. But when it comes to white supremacy and some of the other forms of bigotry, it’s sometimes been beneficial for them to have it. It creates more engagement. It drove some users to spend a lot of time on their platforms, which meant you could count on them to create free content and increase your user engagement numbers. These numbers, in turn, could be used to sell ads and raise money from investors.

Why won’t the leadership at the social media companies establish stronger standards for what appears on their sites?

It’s complicated. The executives don’t want to act as if they can control it because they fear that admission could lead to government regulation. If they say, “Yeah, we could tweak these dials and hire some more people to monitor content,” someone might ask, “Well, why aren’t you doing these things?” The fact is that a lot of the sites, Facebook especially, have inconsistent moderation and unclear rules. That’s a problem they could fix.

Frankly, we’ve always had limits on free speech on the platforms. From day one, they got rid of spam. They got rid of child pornography. When I was at Reddit, I led an effort to get rid of unauthorized nude photos and revenge porn. Today, everyone accepts these shouldn’t be allowed. But five years ago, when I proposed it, this was controversial. None of the big platforms had done it because they considered that their role was to be an open space for free speech. But once Reddit led the way, the others—Facebook, Twitter, YouTube, Microsoft—followed.

Did that experience show you that change was possible?

Absolutely. You know, one reason I took on the role of Reddit CEO was because I wanted to do something about harmful content. Reddit had some of the worst on the Internet. I thought, “If Reddit can make changes, then other platforms will follow.” And that’s what happened. The others were just waiting for someone else to take the first step.

After the 2016 election, it was discovered that Facebook had served as a launch pad for disinformation aimed at supporting Donald Trump’s candidacy. A consultancy related to the Trump campaign and funded by Robert Mercer, Cambridge Analytica, used access to entire chains of “friends” to exploit the personal information of up to 87 million Facebook users in order to facilitate micro-targeted political messaging. Facebook initially claimed it was not responsible for the data-mining, though the company was eventually forced to pay a multibillion-dollar fine. But was the obstacle to better protecting user privacy simply a technology issue?

No. I mean, look at Facebook and the resources that it has. They can influence stuff. It’s not as though once something is built into the technology, it’s never changeable. In tech, they are constantly changing their technology and they are constantly paying down what we call “technical debt,” which is the cost of fixing unproductive technical problems. If some feature doesn’t solve long-term problems, that’s technical debt. Over time, you try to pay it down and fix it.

Here, they are saying either “This is not a problem” or “We don’t think this is a big enough problem to put on our to-do list.”

I believe that Facebook even sent a consultant to show the data miners how to be more effective with their technology. That fact alone makes you think they were more involved than they’ve admitted.

And I’m sure the consultant charged some kind of fee for that service.

Frankly, the fact that Facebook sells users’ data is part of its problem. It could stop doing that. All you have to do is turn off those application programming interfaces (APIs), and that will shut off access. At Reddit, when I was there, we had the opportunity to sell our data. As I researched it, I saw that once you sell data, there’s no control over what happens to it. We dropped the idea. Reddit now, I believe, is selling data. The current leadership is about driving revenues. You can make a lot of money that way.

Do you think that similar things are happening in the current election campaign?

I don’t know this as a fact, but you can assume they are. As far as I know, Facebook took down two networks for Russian disinformation. In an earlier effort, Facebook removed thirteen accounts. I am surprised by how small the takedowns were and wonder how many were missed. I can’t imagine that they [Russian and other foreign actors] just gave up, but I haven’t heard of more being taken down. Now, at Reddit, people would create new accounts and rebuild their followings in the same way until we created a way to stop that from happening. I haven’t heard of Facebook taking charge that way. I imagine they would have disclosed those removals and I haven’t seen anything about it since the end of September.

You know, every time a problem is identified at Facebook, the leadership claims they’ve changed it. A while ago, ProPublica, I think, exposed how Facebook’s ads could be customized so that they discriminated against Black people looking for housing. The Facebook leadership said they were going to change that. But when people looked again, it hadn’t really changed. Given the history, I don’t believe that Facebook has made changes that would prevent the problems of 2016 from recurring.

Twitter has started labeling some of the president’s statements as possibly misleading. The language in its warning goes, “Some or all of the content shared in this tweet is disputed and may be misleading.” Is that enough?

My frustration with Twitter is that it’s a little bit too late.

Trump himself and his family’s accounts now have millions of followers. I empathize with Twitter’s executives. It’s not easy. When we were making changes at Reddit, we learned that you can’t change the whole thing at once. If you try, you’re not going to be able to maintain your hold over those changes. If you try to get rid of all of the horrible content at one time, people just keep popping up everywhere, and you just can’t enforce your rules enough to make them seem like a rule. You do things slowly, one piece at a time.

Should they kick the president off the platform?

You know, in early 2017, Laura Gómez and I published a piece in re/code where we said, more or less, “Hey Twitter, you should just kick Trump off your platform. He is violating every rule and encouraging harassment.”

When you have someone with such visibility violating rules, they are teaching that rules don’t matter. The way Twitter worked, Trump was getting rewarded with more users, more views, more publicity for his content. His actions encouraged others to try to find out how far they can go because his was a successful path. My frustration with what Twitter is doing is that it’s a little bit too late—particularly when it comes to limiting hate speech and harassment. Some of the worst accounts now have millions of followers.

One thing Twitter could do right now is to stop promoting some pretty bad accounts. Every day, I get an email from them promoting an Eric Trump account. They don’t necessarily have to ban his account, but they certainly don’t have to amplify it.

Did trolling and online harassment increase during Donald Trump’s presidency?

I’m sure it did. Again and again, his social media actions drove waves of attention and violated Twitter’s rules. In doing that, he encouraged others to do the same. You ended up with worse and worse behavior on that platform, and, I think, in the real world.

I don’t say that lightly. The way social media works is that bad behavior gets rewarded. The sites are designed to amplify conflict and outrage. It’s why Trump tweets the way he does. Every time he posts something outrageous, it gets retweeted, liked, and shared—it amplifies the original message and widens its reach. The media will cover it, too. All of a sudden, there’s a whole news cycle around a tweet instead of some of the actual problems of the country.

What do you make of Melania Trump, the First Lady, designating online bullying as her signature issue? Is she trolling us—like when she went to inspect immigrant detention centers and wore a coat that said “I really don’t care. Do U?”

I don’t understand that. And I don’t try to analyze it. I haven’t seen that she’s done much. I’ve seen some PR messaging, but I haven’t seen anything concrete.

I don’t believe she’s met with the leaders of any of the platforms. I don’t think she’s met with Common Sense Media, the Anti-Defamation League, or any of the organizations working to combat online bullying and hate. I’m not really sure what she’s done other than make a couple of press announcements.

The New York Post recently published a story based on unverified emails purporting to have come from Hunter Biden’s laptop. Perhaps recalling how the platform was, in 2016, a center for pro-Trump disinformation, Twitter brass blocked links to the report. Then, after some Republican senators threatened an investigation, Twitter’s Jack Dorsey changed his mind, apologized, and claimed he’d made a mistake. What was that?

I think it was fear of regulation. At Twitter, they were probably trying to balance their own desire to prevent possible misinformation from impacting the election with being able to run their business as they always have.

This fear of regulation is what drives a lot of policy change in Silicon Valley. This whole thing really goes back to Silicon Valley’s executives needing to understand what they want for their companies. Is it clear rules that people understand and can follow?

When you’re all over the place, it makes it very hard for people to understand what they are allowed to do and what they aren’t. I do think that if you’ve been doing a bad job of running your business, you do need to change. And if you can’t do it in a good way, you’re going to get regulated anyway.

Some Silicon Valley leaders argue that the best way to combat bad speech is more speech. Are they right?

Frankly, I think the platforms choose to promote free speech whenever it’s comfortable or convenient. Right now, they’re in muddy water, facing anger from users, politicians, and even their employees.

Everybody knows we need to take some content down. You can’t have people posting real-time shootings on Facebook Live. This brings public outrage and new calls for regulation. It’s why the executives come up with these convoluted rationalizations for what they are doing.

You’ve been accused of promoting censorship. How do you answer the charge?

I deny it. My hope is to get more voices onto the platforms, not fewer. It’s about giving every voice an opportunity to speak. When you are pushing voices off the platform and only the loudest voices are given space, you end up censoring everyone else. Right now, you have, in effect, censorship. It’s just not explicit.

What do you think the tech world will look like after November 3?

Of course, I hope Biden wins. I have my fears about what the world will look like afterwards. It’s hard to imagine that in either scenario we’ll return to a calm functioning society.

My hope is that after Biden wins, the tech leaders will feel more comfortable with managing a lot of the hate on their sites. I don’t know if that can happen, because they are just so locked into increasing engagement and pushing people to be more and more enraged to generate that.

To return to my first question: when you first went to Silicon Valley in the 1990s, did you think it would become this swamp?

It’s interesting because tech was supposed to be this wonderful, good, positive thing—and it ended up being so toxic. The opposite of what we aspired for it. Many people of my generation went to Silicon Valley because we wanted to change the world, make it a better place.

It’s sad. What we hoped would become a unifier of society fragmented it even more.